7 Bicycles for the Mind: How GenAI was used to enhance critical thinking in the classroom

Russell Manfield; Alice Hawkesby; and Nicholas Nucifora

How to cite this chapter:

Manfield, R., Hawkesby, A., & Nucifora, N. (2025). Bicycles for the mind: How GenAI was used to enhance critical thinking in the classroom. In R. Fitzgerald (Ed.), Inquiry in action: Using AI to reimagine learning and teaching. The University of Queensland. https://doi.org/10.14264/d4aaaf6

Abstract

This chapter demonstrates how generative artificial intelligence (GenAI) can be used to foster, rather than corrupt, critical thinking in higher education. Conducted in an undergraduate Digital Innovation course at the University of Queensland in Semester 1, 2025, the study engaged 88 students across five topic clusters encompassing artificial intelligence, blockchain, wearable internet of things (IoT), genomics and cybersecurity. Students were encouraged to use GenAI tools transparently in preparing debates, creating and moderating peer resources, and completing case studies, while the researchers simultaneously trialled AI-assisted grading to compare against human marking. Data was drawn from surveys, interviews, and some 1,600 assessment artefacts. Students used GenAI to support content creation, valuing its ability to enhance comprehension and efficiency, but continued to prefer human feedback for depth and context. Overall, the study suggests GenAI can serve as a “bicycle for the mind,” enhancing critical thinking when integrated purposefully into tertiary teaching practice.

Keywords

critical thinking, artificial intelligence, generative AI (GenAI), tertiary learning, bicycle for the mind.

Practitioner Notes

- Encourage transparent GenAI use by asking students to share prompts and outputs, normalizing critical engagement rather than secretive reliance.

- Embed peer-to-peer moderation to highlight when GenAI undermines, rather than supports, critical thinking—students often give sharper “AI call-outs” than staff.

- Use action learning cycles (interventions, reflection, re-testing) to capture and build on evolving student practices with GenAI.

- Consider GenAI as a “bicycle for the mind”: a tool to scaffold comprehension and expression while still valuing human judgement, nuance and feedback.

- Pilot GenAI for marking consistency checks but retain human oversight—current models tend to over-reward submissions lacking genuine critical analysis.

Introduction

Many academics, and indeed many students, are frightened by the disruptive influence of generative artificial intelligence (GenAI), especially as it affects how university teaching delivers prescribed learning outcomes (Potkalitsky, 2025). The fear among higher education teachers is that GenAI models serve as easy workarounds for the hard work of critical thinking in a dynamic world that demands incisive analysis and empathetic insight. By allowing students to ‘offload’ critical thinking, GenAI tools can challenge the integrity of the content and assessments that teachers have become accustomed to delivering (Gerlich, 2025). Meanwhile, students often approach these models as accessible tools to generate assignments that gain at least adequate marks, without necessarily needing to fully understand the content being exchanged or any limits to its application in a complex and ambiguous world. This chapter shares our experience in directly addressing these fears in a live learning setting.

In a recently published opinion piece by two university academics, the authors lay down a very real yet promising challenge to all university lecturers, arguing that GenAI represents the “death knell” for critical thinking in our teaching and learning environments (Moran & Wilkinson, 2025). In a direct response to this provocative and most welcome challenge, we sought to disprove their contention in an active learning environment. Their letter was published days before our first lesson on GenAI for an undergraduate Digital Innovation course with 88 enrolments offered at the University of Queensland (UQ), Australia. Working together, the first author (as course co-ordinator) teamed up with the co-authors (as student research partners enrolled in the course), using this opinion piece as a contrarian provocation.

Students were tasked to engage with GenAI in exploring the five topic clusters (artificial intelligence, blockchain, wearable internet of things, genomics and cybersecurity) in completing each of their assessments. Since the course architecture had been built around a sequence of tasks that required peer assessment, we adopted an action learning orientation (Ballantyne et al., 2002) to allow us to introduce interventions and then to gauge their impact on critical thinking. This approach allowed us to examine the correlations between frequent GenAI tool usage and critical thinking abilities (Gerlich, 2025).

Research Design

We approached this task in two ways. Initially, we set out to examine the student experience of using GenAI through a series of classroom interventions, comprising surveys and interviews, in which students shared examples of their GenAI use. This sharing fostered open discussion in class of benefits and limits, highlighting examples where GenAI appeared to enhance critical thinking as well as testing multiple components of institutional policies related to GenAI use (Ajjan et al., 2025). This strand of the research was led by the second author, Alice Hawkesby, in her role as a student partner on the project.

Then, largely in parallel, we tracked student marks (awarded manually by the course coordinator) across the assessment trajectory to explore whether a GenAI model could grade student work as effectively as human marking. Marks were awarded for both the resources students submitted and the peer moderations they completed. The course comprised three main assessments: A1, a team debate across five topic clusters; A2, the creation and moderation of resources following each debate; and A3, an individual case study. Transparent use of GenAI was encouraged in each of these assessments. This line of inquiry was led by the third author.

Taken together, our research design sought both to track the student experience in the quest to enhance critical thinking and to broaden the scope of feedback that drives skill development, for student and teacher alike. In this way, the research positioned learners and educators as co-design partners in forming actionable steps towards learning objectives (Singh et al., 2025).

The Research Process

Our research explored how GenAI usage could enhance critical thinking, challenging the prevailing notion that such use equates to cheating or the avoidance of thoughtful analysis (Gerlich, 2025; Moran & Wilkinson, 2025; Potkalitsky, 2025)[1]. Our process initially sought to understand student attitudes to GenAI and how students enacted those attitudes in real-time usage. During the early stages of course delivery, the process expanded to also consider how GenAI capabilities could be applied to improving student feedback on marked assessments (cf. Singh et al., 2025).

To capture the student experience of using GenAI, we applied four intervention phases. The first three phases comprised surveys that attracted a random array of student responses while the fourth phase comprised students selected for interview[2] with the second author.

Each topic cluster was explored via a multi-team debate serving as the A1 assessment arc. Students self-selected and researched their topic, then presented their findings to the class in a debate format. GenAI tools were core to this research process. After each debate, all students, including those who had just presented, created a resource setting out what they had learned from the debate, focused on at least one key insight. These resources were then moderated by peers, with each student moderating three other resources, creating a circular learning trajectory designed to deepen understanding and foster critical thinking. Across the five clusters, this cycle generated some 1,600 individual items for the A2 assessment, each of which was hand-marked by the course coordinator against the rubric shown in Appendix B – Marking rubric for the resource/moderation task set. The individual case study completed the assessment arc as A3 but, since this component contained no circular learning activities, it falls outside our current scope.

To investigate the efficacy of GenAI in marking student assessments, we uploaded the de-identified content of the resources each student created and the moderations that were applied across those resources, for each of the five topic clusters. No information was provided to the GenAI model that would identify any student.

Research Methodology

Action research is a method in which interventions are applied in a live setting of change, to observe what happens after each intervention. Its cyclic nature allows different aspects of the process to emerge in real time across each intervention (Ballantyne et al., 2002).

From the outset, students were encouraged to use GenAI tools to explore the topic debates and to assist in constructing their moderations of peers’ resources. We then offered a survey to all students at three intervention points, receiving anonymous responses as noted in footnote 2. The three student surveys each had slightly different emphases. The first survey gathered baseline information on students’ GenAI tool use, including frequency, purpose, perceived usability, and initial benefits or challenges. The second survey, conducted after the debate and peer-moderation activities, asked students to reflect on how GenAI supported or hindered their performance in these structured tasks. The third survey focused on perceived changes across the semester, including shifts in confidence, independence, and learning strategies.

By observing the engagement with the debates, resources and moderations, we identified five students most active in transparently incorporating GenAI into their research and presentation. These five students were invited for interview as the final intervention phase, with Appendix C containing the interview protocol and selected quotations that illustrate how students engaged with GenAI across the interventions. After each intervention, we shared the observations with the class, demonstrating how GenAI was being used across the cohort and highlighting collected evidence that either affirmed or contradicted the received “death knell” premise (Moran & Wilkinson, 2025).

Evaluation

In one of our early lectures, we discussed a study comparing the efficiency of locomotion relative to body weight for various types of animals (Wilson, 1973). The most efficient unaided locomotion (measured as calories consumed per kilometre travelled per kilogram of body weight) was that of the American condor, with humans less efficient than a horse but more efficient than a dog; with tools, however, a human riding a bicycle was the most efficient by some margin. This study informed Steve Jobs’s remark, a decade or so later, that computers are “bicycles for the mind”: ‘bicycles’ we can harness to achieve things the human brain is not so good at, freeing us to focus on the things we are very good at. The same reasoning applies to harnessing GenAI, and our data reveals that same dynamic, although it is not yet fully realised.

Through our multiple interventions across the arc of the course, we observed students begin with simple explanations and summaries and progress to more sophisticated and transparent applications drawing on GenAI-derived advice. These GenAI-suggested applications included scenarios that students then enacted in class, detailed rubric-based feedback on the resources they had created for moderation by other students, and subsequent appraisal of those moderations. The final A3 assessment also showed how some students grew markedly in confidence given consistent encouragement to use GenAI through the semester, where GenAI usage was NOT equated with cheating but rather with exposing analyses that would otherwise have remained hidden. Nevertheless, GenAI usage to avoid critical thinking was often called out in the A2 assessment, both by the course coordinator and, most interestingly, by other students. These ‘call outs’ were scattered throughout the moderation feedback, often taking the form “this looks like something generated by AI” followed by suggested analytical improvements. Because these comments came from peers, we suspect the feedback loops may have had far greater impact on student perceptions than directions issued by the course coordinator; alas, our data is not sufficient to quantify this conjecture!

Across all the interventions, nearly all students reported using ChatGPT (perhaps owing to brand recognition), with many seeming to confirm the fears of Prof Moran and Dr Wilkinson (2025) by using it to avoid critical thinking. Early in the course, only a small minority[3] were confident enough to share which tool they had used, the prompt(s) they entered and how they judged the responses as suitable, or not, for the task at hand. This transparency rate grew substantially as the semester progressed but still represented a minority for the final topic cluster.

Findings: Student experience with using GenAI

From the start of the course, we sought to capture the student experience of using GenAI to enhance critical thinking through a series of general surveys and targeted in-depth interviews. A typical survey response stated that “without (Gen)AI sometimes it’s hard to find a place to start on assignments” signalling that such tools frame initial thinking. As the semester progressed and students increased their own GenAI repertoires, typical comments developed into “I’ve always understood concepts but sometimes struggle to find the academic language to explain it in report form” signalling a more targeted expectation of GenAI outputs.

Overall, a total of 161 exchanges were collected from participating students throughout the teaching semester exploring GenAI usage across all three assessment categories. Our research reveals that students are already discovering this ‘bicycle’ effect: from our course, nearly 80% rate GenAI as helping them understand course content better than lecturers alone yet, remarkably, 100% of students still value lecturer feedback more than identical GenAI feedback. This suggests students intuitively understand GenAI’s role as an enhancement tool rather than a replacement channel.

Our findings were surprising on a number of fronts. By confronting head-on the assumption, common among academics and students alike in our institutional context, that GenAI is a shortcut for avoiding work and therefore critical thinking, in effect the next generation of cheating, we uncovered the genuine struggles students navigate as they try to make the best use of these rapidly emerging tools.

The catalyst for this evolution arose as students reported that they began using GenAI more confidently after seeing classmates succeed with it. Yet many of these students needed to be pushed to be transparent about their GenAI use, a transparency that in-class peer support helped to cultivate and that the ‘AI as cheating’ bias tends to subvert. In one interesting example, a student reported instructing their preferred GenAI model to revise the first draft of a resource they had created so that it aligned with the marking rubric and would yield a perfect score. Despite multiple iterations, deliberate alignment with the assessment criteria and the GenAI model’s guarantee of a 100% score, the final submission fell short of full human-awarded marks. This experience underscored both the complexity, and perhaps ambiguity, of academic evaluation and the limits of GenAI in delivering on performance promises. So perhaps Prof Moran and Dr Wilkinson (2025) aren’t entirely wrong!

As a final intervention, the emergent findings of this action research were progressively shared with the class to highlight the analytical advantages within reach, as evidenced by exemplar assessments. The intention behind this sharing was to frame an ongoing capability development arc for each student in relation to their continuing GenAI usage. We don’t necessarily seek to change everyone, since innovation often begins with outliers rather than broad averages. Our data points to such outliers, whose beneficial behaviours can help burst idea bubbles and open up novel knowledge arenas (Lazar et al., 2025). Beyond seeking to demonstrate that GenAI enhances critical thinking, we aim to cultivate conclusions that would never have been reached without those tools. While we’re not claiming to be there yet, the direction of improvement has become clearer. We will now repeat this process in a bid to replicate and extend these positive learning outcomes.

Enhancing marking consistency with GenAI

The A2 assessment arc required each student to create a resource and for those resources to be moderated by at least three other students, following each of the topic debates. This arc served to further a circular co-learning environment and generated over 200 accessible items per debate topic, challenging marking consistency against the rubric (see Appendix B – Marking rubric for the resource/moderation task set). Commonly, a few students would approach the course coordinator with questions such as “for the last moderation, I was 1 mark off a perfect score. Why did I lose that mark?” This may seem a ridiculous question, as if the coordinator could recall, off the cuff, that student’s resource and the associated moderations to give an informed answer. On reflection, however, it is an extremely reasonable question for a student to raise in a bid to improve their performance, not only by gaining extra marks but also by sharpening their own critical thinking!

These experiences manifested in two ways, one of which was explored during the course and the other deferred to a later time (see the Future Directions section that follows). We had in our possession all of the resources created by all of the students, all of the moderations undertaken by all of the students and all of the marks awarded by the coordinator for each student for each topic cluster. In short, a lot of data!

Our first experiment therefore used Claude to mark the resources and moderations created by students independently and compared these marks against human scores, allowing us to gauge the reliability of GenAI-generated marking. Using Anthropic’s API[4], we instructed Claude to mark student work by closely following the marking rubric, as can be seen in Appendices D and E. Because this required sending the students’ submitted work to Anthropic’s servers, all student identification was stripped away beforehand; only the submitted work was needed for GenAI grading[5].

The marking for the resources each student created (one resource for each topic cluster) was differentiated from the moderations each student submitted (three moderations for each topic cluster). The prompts we used are shown in Appendix D (for resources created) and Appendix E (for moderations submitted).
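As an illustration only, the sketch below shows one way the de-identification and rubric-marking request described above might be assembled in Python. It does not reproduce the prompts in Appendices D and E: the field names (`student_id`, `name`, `text`), the placeholder model name, the `max_tokens` value and the `MARK: n/3` reply format are all hypothetical assumptions. It builds keyword arguments for the Messages API in Anthropic’s Python SDK but stops short of sending them, so it runs without an API key.

```python
import re
from typing import Optional

# Hypothetical placeholder: substitute a current Claude model identifier.
MODEL_NAME = "claude-model-placeholder"


def deidentify(submission: dict) -> str:
    """Keep only the submitted text, dropping identifying fields such as
    student_id and name (these field names are illustrative assumptions)."""
    return submission["text"].strip()


def build_marking_request(rubric: str, submission: dict, max_mark: int = 3) -> dict:
    """Assemble keyword arguments for a Messages API call that asks the model
    to mark one de-identified submission against the rubric."""
    system_prompt = (
        "You are marking undergraduate work strictly against this rubric:\n"
        f"{rubric}\n"
        f"Reply with a brief justification, then a final line 'MARK: n/{max_mark}'."
    )
    return {
        "model": MODEL_NAME,
        "max_tokens": 500,  # assumed budget for a short justification
        "system": system_prompt,
        "messages": [{"role": "user", "content": deidentify(submission)}],
    }


def parse_mark(reply_text: str) -> Optional[float]:
    """Extract the numeric mark from the assumed 'MARK: n/3' line;
    return None if the model did not follow that format."""
    match = re.search(r"MARK:\s*(\d+(?:\.\d+)?)\s*/\s*\d+", reply_text)
    return float(match.group(1)) if match else None


if __name__ == "__main__":
    sample = {"student_id": "s123", "name": "Jane Doe", "text": "  Blockchain resource...  "}
    request = build_marking_request("Criterion 1: key insight stated clearly.", sample)
    # The identifying fields never reach the request payload.
    assert "Jane Doe" not in str(request)
    print(parse_mark("Clear insight, limited analysis.\nMARK: 2/3"))
    # With the SDK installed and ANTHROPIC_API_KEY set, the request could be sent via:
    #   import anthropic
    #   reply = anthropic.Anthropic().messages.create(**request)
    #   mark = parse_mark(reply.content[0].text)
```

Asking for a fixed final line keeps parsing simple, and returning `None` rather than guessing makes malformed replies easy to route back to a human marker.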

Findings: GenAI marking as a precursor to individual feedback

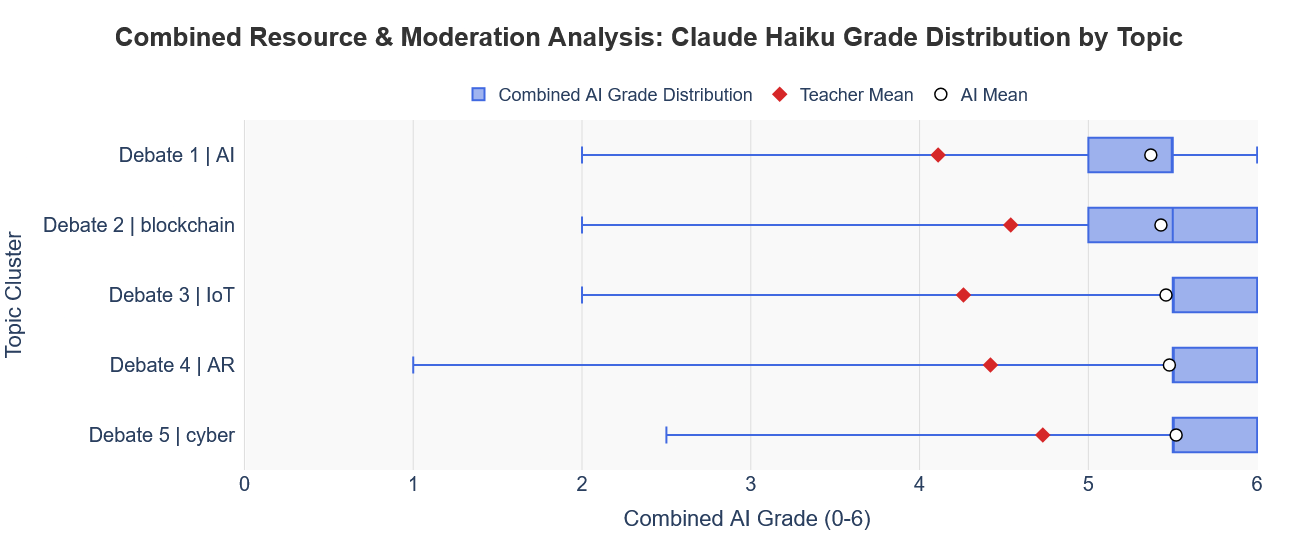

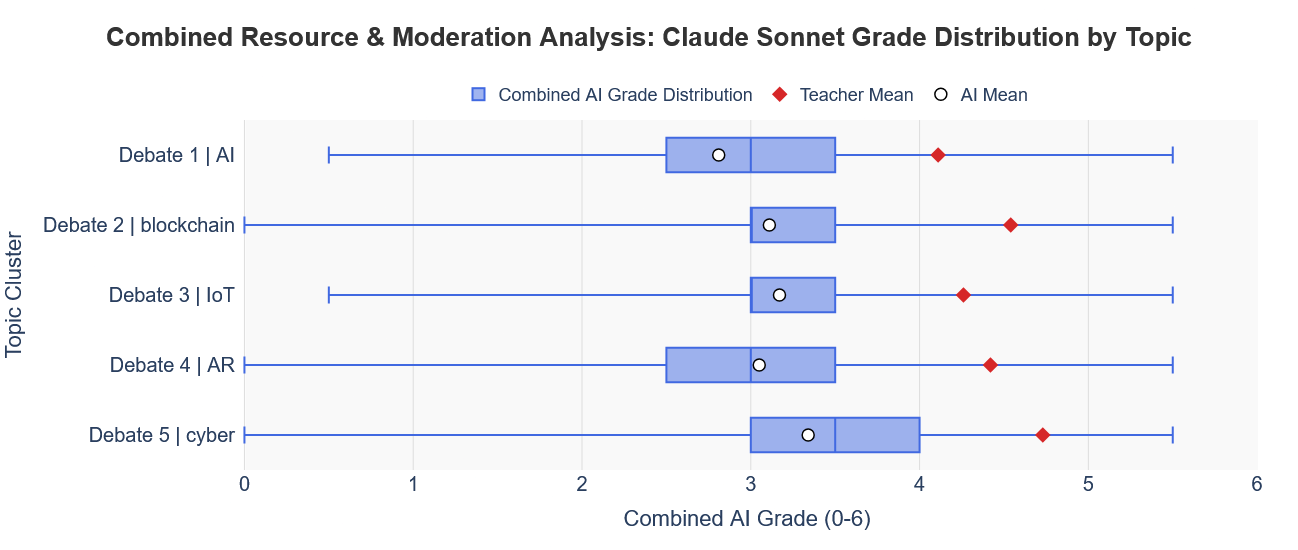

For this objective, our findings were less surprising. We ran two Anthropic Claude models, Haiku and Sonnet, with the outputs from each shown in Figures 1 and 2. Claude Haiku is a much smaller, simpler model, while Claude Sonnet is more advanced. Each model was given the assignment rubric, a detailed set of instructions (see Appendices D and E) and the student submissions across each of the five topic clusters, representing just under 2000 items in total. A summary of the outputs across all five topic clusters was generated to show consolidated marks for the resources created and moderations submitted.

Figure 1 shows that Haiku tends to award full marks for resources created and near-full marks for moderations completed, with a much narrower spread of marks than the teacher-awarded values. There were multiple instances where the teacher awarded 0 marks for a student’s submission while the model awarded close to full marks. On this evidence, Haiku appears to be an unreliable tool for marking assignments that require evidence of critical thinking.


Figure 1 Marking as generated by Anthropic Claude Haiku, the smaller, faster model that is cheaper to run, compared with human (teacher) marks across the 5 cluster topics. Haiku marks consistently generously, with a compact spread of AI-generated grades (the shaded areas in blue, with the average shown as a vertical dotted line).


Figure 2 Marking across the 5 cluster topics as generated by Anthropic Claude Sonnet, the more advanced of the two models used at the time of the course, showing generally more critical marking than that awarded by the teacher, although with a very broad spread of grades (the shaded areas in blue show the majority spread, with the average shown as a vertical dotted line).

Using the same human-graded data across all students, the resources and moderations were then analysed by Claude Sonnet. Figure 2 shows a more critical marking output that, interestingly, follows a binomial distribution, with a median of 3/6. Sonnet does not share Haiku’s problem of being insufficiently critical; rather, it errs in the opposite direction. This suggests that increasingly complex and capable generative models may produce more judicious marking, although more work is needed to align their outputs more closely with human-awarded grades.

The data can also be interpreted in terms of the average marks awarded for each component (the resources created and the moderations submitted) compared with human marking, as shown in Table 1 below:

Table 1 Comparison of AI-generated marks to human-awarded marks (6 marks in total)

| | Claude Haiku Resources (up to 3 marks available) | Claude Sonnet Resources (up to 3 marks available) | Claude Haiku Moderations (up to 3 marks available) | Claude Sonnet Moderations (up to 3 marks available) |
|---|---|---|---|---|
| Average Mark | 2.6 | 1.52 | 2.78 | 1.55 |
| Average Difference* | 0.52 | -0.55 | 0.1 | -1.1 |

(* relative to human marking)

The simpler model’s average marks sat closer to human marking for moderations completed (a difference of 0.1, against -1.1 for Sonnet), while for resources created the two models diverged from human marking by a similar margin in opposite directions (0.52 for Haiku, -0.55 for Sonnet). Averages alone can mislead, however: Haiku’s close average for moderations coexists with the compact, generous marking shown in Figure 1. These differences highlight an underlying conundrum: whether human marking is, in fact, a reliable and robust standard against which to benchmark GenAI models. The ability of GenAI models to ‘learn’ the domain and ‘improve’ over time was not harnessed in this study, but is considered in the future directions as a way to align the AI more closely with the teacher’s benchmark.
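The figures in Table 1 can be reproduced from paired marks with a few lines of Python. The sketch below assumes, consistent with the signs in Table 1 and the generous marking described for Haiku, that “average difference” means the AI mark minus the human mark for the same item; the marks in the example are invented for illustration and are not study data. It also reports the mean absolute difference and each marker’s spread, because a signed average close to zero (such as Haiku’s 0.1 for moderations) can conceal large item-level disagreements, like the cases where the teacher awarded 0 and the model awarded close to full marks.

```python
from statistics import mean, pstdev


def compare_marks(ai_marks: list[float], human_marks: list[float]) -> dict:
    """Summarise agreement between AI and human marks for the same items.

    Assumes 'average difference' = mean(AI mark - human mark), so a positive
    value means the AI marked more generously than the human marker.
    """
    if len(ai_marks) != len(human_marks) or not ai_marks:
        raise ValueError("need two non-empty lists of equal length")
    diffs = [a - h for a, h in zip(ai_marks, human_marks)]
    return {
        "ai_average": mean(ai_marks),
        "average_difference": mean(diffs),
        # Absolute differences cannot cancel out, unlike signed ones.
        "mean_absolute_difference": mean(abs(d) for d in diffs),
        # Population standard deviation as a simple measure of spread.
        "ai_spread": pstdev(ai_marks),
        "human_spread": pstdev(human_marks),
    }


# Invented marks out of 3: the signed differences cancel to zero,
# yet the two markers never agree on any item.
summary = compare_marks([3, 0, 3, 0], [0, 3, 0, 3])
print(summary["average_difference"], summary["mean_absolute_difference"])
```

Reporting the mean absolute difference alongside the signed average would show whether a model that looks well aligned on average is actually agreeing with the teacher item by item.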

Limitations and Future Directions

While exploring new approaches to harnessing GenAI in the classroom, a number of limitations are apparent with this study. Firstly, the cohort represents a single class, albeit one that attracted students across many disciplines including business (54), information technology (2), biotechnology (5), commerce (5), communications (2), tourism (2), design (3), engineering (2), arts (3) and computer science (2)[6]. Gauging multiple classes across multiple disciplines and multiple institutions would strengthen the depth and utility of findings.

Secondly, the survey response rate was generally low, and the responses may also reflect a self-reporting bias that masks underlying usage patterns and attributions for critical thinking development. We also did not draw correlations across different GenAI models, so may have missed important nuances in how each model differently affects critical thinking.

Conclusions and recommendations

The tension we sought to address is captured by this collected student quotation: “I’ve always understood concepts but sometimes struggle to find the ‘academic language’ to explain it in report form – using AI my work is more refined.” This reflection highlights that students do not necessarily need GenAI to supply understanding; rather, they need support in using tools to refine and extend their own ideas. It suggests that developing robust approaches to GenAI involves cultivating metacognitive awareness, helping students to recognise when GenAI is scaffolding their thinking productively versus when it risks becoming a shortcut to avoid critical thinking. In other words, the pedagogical challenge is not simply to teach students what GenAI can do, but to guide them in using it deliberately to enhance critical engagement, communication, and disciplinary reasoning. This orientation frames GenAI as a “bicycle for the mind,” where the value lies not in outsourcing intellectual effort but in amplifying the student’s own capacity to think, question, and articulate ideas with greater precision.

This data opens up several exciting avenues for future work to build a smarter and more expansive use of GenAI in the classroom. The grading experiments provide an indicative direction for developing more sophisticated and reliable GenAI-powered assessment tools.

1. Developing GenAI-Assisted Grading:

There are numerous variables that can be tweaked to further improve GenAI’s performance, such as the following:


- Prompt Engineering: Refining the system instructions given to GenAI to elicit more accurate and nuanced responses

- AI-Friendly Marking Criteria: Modifying rubrics to be more easily interpretable by GenAI models

- Few-Shot Examples: Providing a small number of teacher-marked examples so that the model can infer the desired marking style, allowing the model to “learn to learn”

- Trying Different Models: Evaluating the performance of new and emerging GenAI models which come out periodically

- Fine-Tuning: Helping teachers adapt the model’s outputs so that they are personalised to each teacher

- Mark Trajectories: Compiling students’ sub-unit marks so they can better understand where they are meeting (and missing) the rubric criteria

- Re-marking: Allowing students to request a re-mark from staff if they believe their grade is unjustified or ambiguous; GenAI could then be trained against the outcome of this human review

2. Enhanced, Personalized Feedback for Students:

As introduced above, students’ requests for specific, individual feedback on their resources and moderations present a hurdle that may not be humanly surmountable. Answering them would involve the coordinator reading the resource that student created, reading each resource the student moderated, and gauging each of the student’s three moderations against the marking rubric. Then, as the course progresses, the coordinator would need to repeat this for each cluster to advise the student on whether their analytical skills are improving, as evidenced by the assessment. Such a task string is simply not scalable!

However, adopting the ‘bicycles for the mind’ metaphor, this appears to be a task at which GenAI tools could be very adept. Given all of each student’s previous submissions and the feedback already provided, a GenAI tool could ‘learn’ to gauge each student’s improvements and hurdles in the context of the whole cohort. Such a tool could then craft individual, measurable tasks to strengthen each student’s performance, not only in terms of marks but also in terms of critical thinking as evidenced by their submitted work.
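To make the few-shot and personalised-feedback ideas above more concrete, here is a hypothetical Python sketch that assembles a chat-style conversation: a handful of teacher-marked examples supplied as completed user/assistant turns, followed by one student’s earlier feedback and their new submission. The function name, parameters and prompt wording are illustrative assumptions rather than a tested design, and the message list is only built, not sent to any model.

```python
def build_feedback_messages(
    teacher_examples: list[tuple[str, str]],
    prior_feedback: list[str],
    new_submission: str,
) -> list[dict]:
    """Return a message list in the role/content format used by chat-style
    APIs such as Anthropic's Messages API.

    teacher_examples: (submission, teacher's marked feedback) pairs used as
    few-shot demonstrations of the desired marking style.
    prior_feedback: this student's earlier feedback, oldest first, so the
    model can comment on improvement across topic clusters.
    """
    messages = []
    for submission, teacher_feedback in teacher_examples:
        # Each example is a completed exchange the model can imitate.
        messages.append({"role": "user", "content": submission})
        messages.append({"role": "assistant", "content": teacher_feedback})
    history = "\n".join(
        f"- Cluster {i}: {fb}" for i, fb in enumerate(prior_feedback, start=1)
    )
    # The final user turn carries the student's history and new work.
    messages.append({
        "role": "user",
        "content": (
            f"Earlier feedback for this student:\n{history or '- none yet'}\n\n"
            f"New submission:\n{new_submission}\n\n"
            "Mark against the rubric and suggest one specific task to strengthen "
            "the student's critical analysis."
        ),
    })
    return messages
```

Presenting teacher examples as completed turns is one common few-shot pattern; the same examples could instead be embedded in a system prompt. Either way, any real use would need the same de-identification applied in the marking experiment.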

Our data shows that students are seeking to learn how to use GenAI tools to enhance human judgment and craft authentic insights. They recognise that these tools can be shallow and even misleading, yet when properly harnessed offer learning gains: gains derived from thinking critically about the topic rather than simply accepting whatever the GenAI model returns. This recognition is more sophisticated than critics might expect. As one student articulated: “I’m very wary about using GenAI in place of a human opinion or perspective, to me it’s a database more than a perspective.” Students demonstrate clear boundaries around AI use, with our data indicating that 44% specifically avoid GenAI for personal or emotional contexts, citing needs for “personal insights,” “feelings and emotions” and maintaining their authentic writing voice.

GenAI models offer immediate responses, just like Google, Wikipedia and other online tools. At best, these models allow students to explore different scenarios to refine and expand their thinking. At worst, such tools ‘shirk the work’. However, our evidence suggests students are naturally developing sophisticated usage patterns that maximize the former while minimizing the latter. Our digital innovation course is just the beginning, as we build on this data towards a thoughtful, courageous and transparent use of GenAI tools to bolster critical thinking across complex topics and derive novel insights. Students themselves are pointing toward this integration model, with one suggesting an ideal balance of “AI – 40% and educator – 60%”. They consistently prefer GenAI for technical writing improvements, structure, and research efficiency, while reserving human feedback for subjective interpretation, context-dependent situations, and specialized knowledge. In short, we seek to harness GenAI as a ‘bicycle for the mind’.

The provocation of Moran and Wilkinson (2025) was extremely timely. It allowed us to probe more deeply in our AI-informed action research in a live classroom teaching course. Each of us learned about the value of these tools in the classroom (see our Learning Experience sections, below). It provoked us to engage with the nuances of GenAI usage and to appreciate that students are, in fact, wrestling with this new platform just as academics are. Our experience gives us some stepping stones to address our fears as partners in learning, improving our critical thinking skills aided by GenAI tools: our bicycles!

Researcher Reflections

The following reflections provide complementary perspectives, illustrating how the project unfolded in practice.

Learning Experience | Russell Manfield | Senior Lecturer, UQ Business School

Harnessing GenAI to think differently about innovation | a course coordinator’s reflection

I created the undergraduate course on digital innovation and entrepreneurship at UQ in 2022, designed to bring this lively domain into focus primarily for business students. During this first course delivery, OpenAI launched ChatGPT, a publicly available GenAI model that became a viral sensation and triggered a myriad of subsequent model releases.

During these early years, I used these GenAI models as a curiosity in the vein of “let’s see what they can do” to build student inquiry and awareness. I did NOT use them directly as a tool to drive critical thinking. By mid-2024, this ‘curiosity’ focus became untenable: if the truth doesn’t matter, then GenAI tools are fun, but if significant decisions ride on the insights and judgments of such explorations then these same tools need careful skills curation that simple ‘play’ activities don’t foster. I realised that the capabilities of emerging GenAI tools needed to be directly harnessed into any curriculum addressing digital innovation. That realisation led to the 5-topic cluster structure of the course delivered in 2025, drawing on the array of research specialists I was able to access at UQ.

This reinvention of the course was underpinned by three grounding principles. Firstly, I wanted to explicitly encourage GenAI usage so that we could all learn from each other. The focus was not content delivery but a co-learning ecosystem in which we could all experiment with different facets of the GenAI phenomenon and report back to our community of inquiry. As a co-learner in this community, I sought to embrace everything its members shared. Secondly, I wanted topics under the digital innovation umbrella that were current, that encouraged inquiry, that reflected discussions held beyond the hallowed precincts of the university, and that drew on the research expertise within our university. I am very grateful for the expertise shared, largely by our UQ School of Electrical Engineering and Computer Science[7], which underpinned the artificial intelligence, blockchain, cybersecurity and internet of things topic clusters. My colleague in the UQ Business School recommended the genomics specialist through his health research[8]. Thirdly, I wanted to drive engagement with these topics, so I pioneered a multi-team exploratory debate structure in which up to 20 students led exchanges and discussions on their chosen topic cluster. Before the first debate, I was genuinely nervous about how it would work and whether students would actually learn from this novel format. As that first debate progressed, I became convinced that the format offered a useful and engaging learning experience for students and teachers alike, proactively harnessing GenAI as a tool to drive the emergence of critical thinking.

There was a lot happening in this classroom as this action learning trajectory emerged from these three grounding principles. I very much valued the insights of my course facilitator, Yumeng Wu, which ensured the smooth running of each class and assessment item. I am also very grateful to my two student research partners, whose reflections appear below; each brought a depth of access to, and understanding of, the student experience that would otherwise have remained obscure, or perhaps totally hidden.

Learning Experience | Alice Hawkesby | Bachelor of Biotechnology student, UQ

Navigating Peer Perspectives on GenAI and Critical Thinking | a student researcher’s reflection

As a student researcher, I acted as an intermediary between my peers and the research process, creating a more relaxed and confidential space for students to share their experiences and concerns around GenAI use in the TIMS2302 digital innovation cohort. This peer-to-peer dynamic encouraged more open conversations, as students felt less pressure about potential academic consequences.

To explore the potential of GenAI for enhancing critical thinking, I used a mixed-methods approach. I began with confidential written surveys to gather broad trends, followed by qualitative interviews to dive deeper into students’ attitudes and behaviours. Toward the end of the course, I selected five trusted individuals to engage in more in-depth discussions about their use of GenAI in academic coursework. My research was informed by current literature on GenAI in education, as well as regular consultation with Dr. Manfield. I compiled and analysed the data using Excel, employing comparative analysis and descriptive statistics to identify recurring patterns. A notable limitation of the study was its reliance on self-reported data, which can be subject to bias or omission.

As a student myself, I’m acutely aware of how GenAI is used in academic settings. I also rely on GenAI platforms (perhaps more than I would openly admit), and my observations suggest this is common among my peers as well. Yet, despite the growing presence of GenAI in our academic lives, I encountered consistent resistance when trying to probe its impact on critical thinking. Many students were hesitant to speak openly about their use of GenAI, expressing fear or guilt. In my experience, GenAI is often discussed with negative connotations, and universities have contributed to this by framing its use as academically dishonest or inauthentic. Even in this course, where GenAI usage was openly encouraged, there was still a prevailing reluctance to disclose its use.

Several students initially denied using GenAI to complete their assignments, yet later confided during interviews that they had relied entirely on GenAI platforms and simply chose to conceal it. This duality suggests a disconnect between institutional policies and actual student behaviour. I question the effectiveness of discouraging GenAI use when universities currently lack the means to regulate or detect it sufficiently. To test the assumption that GenAI guarantees high academic performance, I conducted a personal experiment. I generated an assignment entirely with ChatGPT, prompting it with all relevant criteria and guidelines, and submitted it to Dr. Manfield for grading without telling him about the experiment. The resulting mark was 75%, notably lower than the grades I typically received with minimal GenAI input. This outcome challenged my assumptions and raised important questions about the effectiveness of GenAI in replacing human effort.

Initially, I believed GenAI to be a shortcut tool for students seeking to avoid critical thinking. My perspective has evolved. I now view GenAI as a tool that, with thoughtful use, has the potential to enhance, rather than replace, critical thinking.

Learning Experience | Nicholas Nucifora | Bachelor of Computer Science student (since graduated), UQ

Creating GenAI tools to encourage critical thinking | a student researcher’s exploration

As a student researcher for this project, I was responsible for creating the GenAI marking assistant and evaluating it against human-marked grades for the class’s RiPPLE[9] activities. For years, the future of AI in education felt like a distant concept, but with the release of ChatGPT, it has quickly become an immediate reality. This project gave me a valuable opportunity to help shine a light on the new possibilities this technology brings. My aim was to test whether this powerful technology could be used responsibly to support, and perhaps even enhance, our learning environment.

The project started poorly. The dataset had systemic bugs I couldn’t fix without losing key information, and my initial test, run on a very basic GenAI model, gave full marks to most of my peers. Switching to a more capable model transformed these results: it graded more critically, and the marks followed a binomial distribution, similar to the human-graded results. Because GenAI outputs are probabilistic by nature and aren’t always consistent or correct, I wasn’t sure how the marks would turn out, so this was a pleasant surprise. That said, the limitations were obvious. Owing to the bugs, we lacked access to critical human-graded data, and the model’s marks still didn’t align closely with the human-graded marks. This shows that GenAI feedback still has a way to go, but these issues are likely resolvable through the future directions we have identified.

The key insight for me wasn’t the AI’s marking ability but rather the realisation that AI could be used to fill gaps in feedback. For these RiPPLE assignments, students made four weekly submissions but received only a single mark with no comments. Personally, going through these activities without feedback, I didn’t strive to improve because I had no direction on what I was doing right or wrong. As a result, my critical thinking skills didn’t grow; I simply relied on the skills I already had. With a large cohort and just two staff, detailed feedback was never feasible. Adding more tutors wouldn’t be practical, and reducing the frequency of assignments would have removed what I loved most about the course. GenAI could sustain these high-engagement structures by providing personalised feedback at scale, freeing teachers to design more engaging syllabuses that would otherwise be impossible.

This project put me in a unique position: a student in the course who was also building the tool that might one day grade it. This dual perspective showed me that the real gain isn’t just consistent marking; it’s about shaping how students interact with GenAI. I know from experience that when students are left to figure out GenAI on their own, they often follow the path of least resistance, using it as a shortcut rather than a learning aid. By integrating systems that challenge us, such as a GenAI tool that provides constructive feedback instead of easy answers, we can model productive uses. This experience reinforced that the next generation won’t benefit from pretending GenAI doesn’t exist. They’ll benefit from structured opportunities to explore its potential, understand its limits, and apply it in ways that genuinely develop their abilities.

References

Ajjan, H., Potkalitsky, N., Mucharraz y Cano, Y., McVey, C., & Acar, O. (2025, August 21). 5 sample classroom AI policies. Harvard Business Insights. https://hbsp.harvard.edu/inspiring-minds/sample-classroom-ai-policies

Ballantyne, R., Hughes, K., & Mylonas, A. (2002). Developing procedures for implementing peer assessment in large classes using an action research process. Assessment and Evaluation in Higher Education, 27(5), 427–441. https://doi.org/10.1080/0260293022000009302

Gerlich, M. (2025). AI tools in society: Impacts on cognitive offloading and the future of critical thinking. Societies, 15(1), 6. https://doi.org/10.3390/soc15010006

Lazar, M., Lifshitz, H., Ayoubi, C., & Emuna, H. (2025). Would Archimedes shout “Eureka” with algorithms? The hidden hand of algorithmic design in idea generation, the creation of ideation bubbles, and how experts can burst them. Academy of Management Journal, 68(5), 881–906. https://doi.org/10.5465/amj.2023.1307

Moran, A., & Wilkinson, B. (2025, March 2). Students’ use of AI spells death knell for critical thinking. The Guardian. https://www.theguardian.com/technology/2025/mar/02/students-use-of-ai-spells-death-knell-for-critical-thinking

Potkalitsky, N. (2025, July 7). AI should push, not replace, students’ thinking: 3 human skills to cultivate in class. Harvard Business Insights. https://hbsp.harvard.edu/inspiring-minds/ai-student-thinking-skills

Singh, R., Miller, T., Sonenberg, L., Velloso, E., Vetere, F., & Howe, P. (2025). An actionability assessment tool for enhancing algorithmic recourse in explainable AI. IEEE Transactions on Human-Machine Systems, 55(4), 519–528. https://doi.org/10.1109/THMS.2025.3582285

Wilson, S. S. (1973). Bicycle technology. Scientific American, 228(3), 81–91.

- The project formed part of a UQ Teaching Innovation Grant with ethics approval 2024/HE001346.

- From a class of 88 students, each survey attracted the following responses: phase 1 | n = 25; phase 2 | n = 30; phase 3 | n = 30; phase 4 | n = 5, where students were invited for interview after showing enthusiastic engagement with GenAI usage in the initial three survey phases.

- 73 students completed three moderations each for the first topic cluster, of whom only 3 were transparent about their GenAI usage. For the final topic cluster, 84 students completed three moderations each, with 34 students sharing how they used GenAI tools to frame their understanding.

- Anthropic provides an application programming interface (API) that allows structured queries to be run over a data array.

- This exercise was a trial; future iterations of this approach will seek to adopt open-source models that can run locally, so that absolutely no data leaves our institution.

- The balance of the class comprised international exchange students across a range of disciplines.

- I am particularly grateful to Prof Tim Miller, Professor in AI at UQ EECS, whose initial enthusiasm validated my approach and helped attract other faculty members to serve as guest presenters in their areas of specialisation.

- I am grateful to Prof Andrew Burton-Jones of UQ Business School for his enthusiasm and his referral.

- RiPPLE is a proprietary UQ platform, licensed to several universities, that allows students to create resources that are then moderated by other members of the class. For this course, each student was required to create one resource after each multi-team debate and to moderate three others, thereby creating a circular co-learning environment.