2 From Anxiety to Agency: A Case Study in Co-Designing an Ethical AI Guide for Science Students

Nantana Taptamat; Marnie Holt; Dominic McGrath; Tiarna McElligott; Lauren Miller; and Hana Purwanto

How to cite this chapter:

Taptamat, N., Holt, M., McGrath, D., McElligott, T., Miller, L., & Purwanto, H. (2025). From anxiety to agency: A case study in co-designing an ethical AI guide for science students. In R. Fitzgerald (Ed.), Inquiry in action: Using AI to reimagine learning and teaching. The University of Queensland. https://doi.org/10.14264/74c5f7e

Abstract

As generative AI (GenAI) becomes embedded in academia, students often face a mixture of curiosity and anxiety, caught between the potential of new tools and the fear of academic misconduct. This chapter addresses the critical challenge of how students’ uncertainty about AI use creates barriers to deep learning, authentic assessment, and the development of scientific reasoning skills in higher education. In this chapter, we also detail the development of the Science Generative AI Essential Guide, an open educational resource at the University of Queensland designed to address this challenge. Grounded in principles of self-regulated learning and Multimedia Learning theory, we share the story of our iterative, evidence-based process, moving from identifying student needs to co-designing a practical, multimodal module with student partners. Student partners played a central role in shaping module content, evaluating accessibility, and co-creating ethical AI scenarios. The case study highlights how grounding our intervention in the real-world tensions of students’ experience, particularly around assessment integrity, was key to building their confidence and sense of agency. We present our key takeaways and design principles, offering a practical model for educators seeking to move beyond policy and proactively support students in the ethical use of AI. The chapter includes a teaching team reflection, insights from our student partners, and an example of an ethical scenario that can be adapted for classroom use.

Keywords

Generative artificial intelligence, academic integrity, science learning, student-centred design, staff-student partnerships, AI literacy, self-regulated learning, cognitive load

Practitioner Notes

- Treat students as partners from scoping to review. Their lived experience surfaces real risks and makes resources credible among peers.

- Do not leave the guide as optional reading. Reference it in class, tutorials, and assessment briefings, and assess its use with short, applied tasks.

- Prompt forethought, monitoring, and reflection around AI use. Ask students to justify when and how AI supported learning and where it might have bypassed it.

- Prefer short videos, interactive checks, and concise tables over dense text. Sequence content from simple to complex with scenario practice throughout.

- Track shifts in student confidence, quality of evaluations of AI outputs, and correct disclosure and referencing of AI use, then iterate.

Background

This case study is situated within The University of Queensland (UQ), a large research-intensive institution in Australia committed to fostering “accomplished scholars and courageous thinkers.” The arrival of ChatGPT in late 2022 sent shockwaves through higher education globally, and UQ was no exception. In 2023–2025, one of the most urgent challenges facing both students and educators at UQ has been the integration of generative artificial intelligence (GenAI) tools, such as ChatGPT, into teaching, learning, and assessment.

Beyond the surface-level concerns about academic integrity, we identified a deeper pedagogical problem: students’ uncertainty about appropriate AI use was creating significant barriers to learning. Specifically, this uncertainty:

- Remained unresolved and was associated with fear, impeding both learning (Lodge et al., 2018) and the development of trust between teachers and students.

- Inhibited the development of critical evaluation skills essential for scientific practice.

- Created inequitable learning conditions where confident students experimented with AI while anxious students avoided potentially beneficial tools.

- Prevented students from developing AI literacy as a core 21st-century competency.

While these tools offer potential benefits in terms of personalisation and efficiency, they also raise significant ethical and pedagogical questions. Students often express uncertainty about how to use GenAI responsibly without violating academic integrity, a tension that reflects broader concerns about the challenges GenAI poses to assessment and student learning across the sector (McDonald et al., 2025). Educators, meanwhile, face growing pressure to adapt their teaching to ensure fairness and transparency. The underlying pedagogical challenge was that this uncertainty undermined students’ confidence and risked encouraging shallow engagement strategies (e.g. avoiding AI altogether, hiding AI use or using it without reflection). Our co-design process therefore aimed to close this gap by scaffolding ethical, contextualised, and confidence-building approaches to AI use. This mirrors national guidelines in Australia, which now challenge institutions and educators to take shared responsibility for building AI literacy directly into the curriculum (TEQSA, 2024).

In response, the Faculty of Science at UQ developed the Generative AI Essential Guide, an open, multimodal educational resource aimed at supporting students in understanding how to use GenAI ethically in their studies. This project was grounded in a Scholarship of Teaching and Learning (SoTL) approach: it drew directly on student voice, institutional policy, and theoretical frameworks to inform iterative module design. Through surveys, student–staff partnerships, and classroom piloting, the development of this guide highlights a teaching-as-inquiry model that responds to authentic student concerns. The case illustrates how SoTL can address pressing pedagogical challenges by translating abstract policy into inclusive, practical learning experiences.

Exposure and Student Understanding of Generative AI

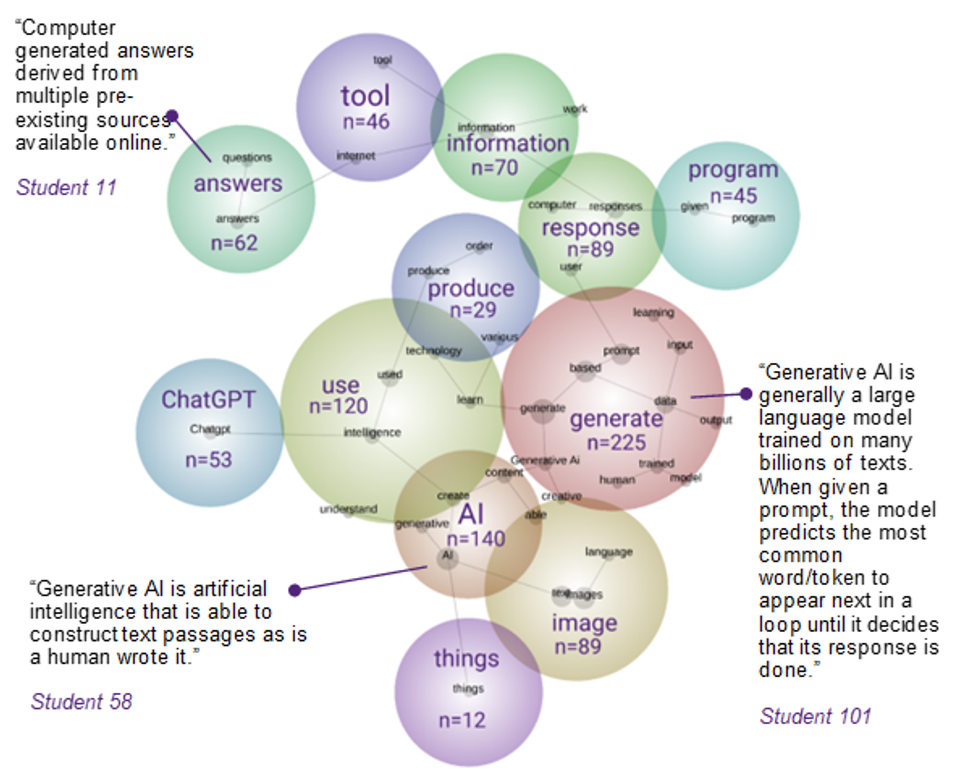

Data from internal 2023–2024 science student surveys indicate that understandings of GenAI are varied and complex. Students predominantly associate GenAI with tools such as ChatGPT that generate textual responses to prompts. Many responses highlight the capability of GenAI to produce not only text but also images, music, and various forms of media. The overarching theme is the recognition of GenAI as a powerful tool that leverages vast datasets to create original outputs derived from existing patterns in the training data. The Leximancer concept map suggests that the understanding of GenAI among students is multi-dimensional but centres around a few core concepts. “AI” and “generate” are at the heart of the conversation, with “use” being the most commonly associated action. The term “ChatGPT” has emerged as a significant node, indicative of students’ familiarity with specific AI tools.

Figure 1 Leximancer analysis of Semester 2, 2023 student definition of GenAI.

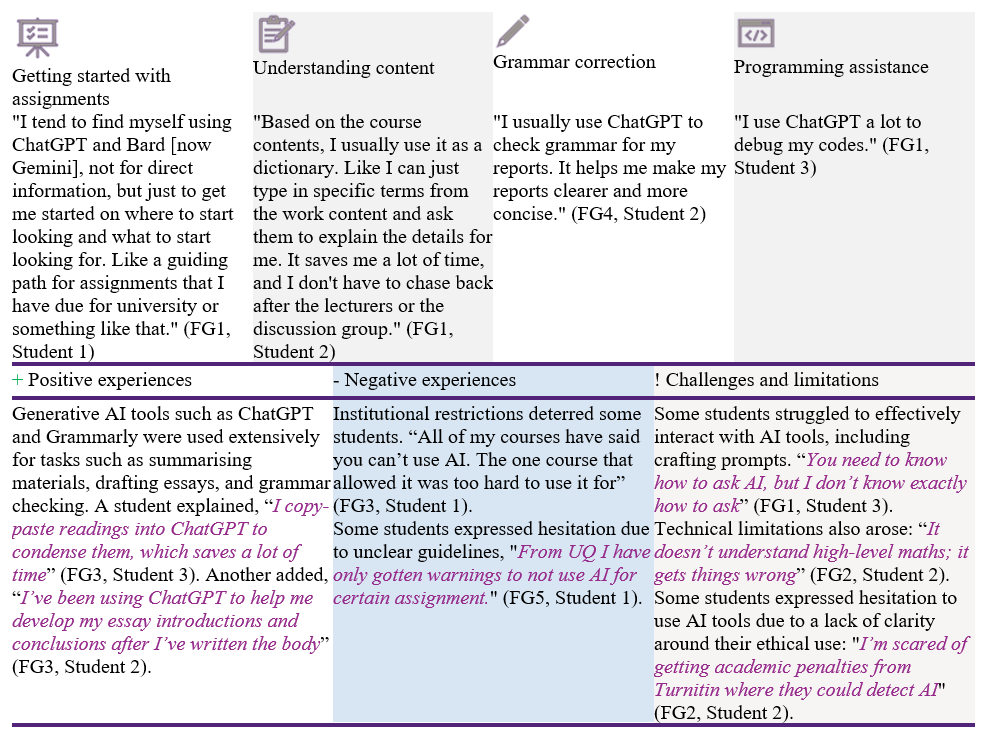

Similarly, in Semester 2, 2024, students described diverse uses of generative AI tools in their academic work. Common uses are summarised in Table 1.

Table 1 Student Perceptions of Generative AI Tools: Uses, Benefits, and Challenges

In summary, students predominantly use generative AI tools for text-based tasks like summarising and grammar correction. However, institutional barriers, prompt-crafting difficulties, and unclear ethical guidelines hinder their ability to fully leverage these tools. Clearer policies and training are needed to address these challenges effectively.

Theoretical Framework

Our approach to addressing this challenge was informed by two key learning theories, Self-Regulated Learning (SRL) and Multimedia Learning Theory (Mayer, 2020).

Self-Regulated Learning (SRL)

Zimmerman’s (2002) model of self-regulated learning emphasised cyclical processes of forethought, performance, and self-reflection. Broadbent, Panadero, Lodge, and de Barba (2019) extend this perspective, noting that in higher education, SRL also requires the regulation of cognition, motivation, behaviour, and emotion, and that digital environments both enable and complicate this process.

In the context of generative AI, students must adapt traditional SRL strategies to new challenges. Effective use of AI requires:

- Making metacognitive judgments about when AI assistance supports rather than undermines learning.

- Setting clear goals for each AI interaction (e.g., deepening understanding versus accelerating task completion).

- Monitoring reliance on AI tools and critically evaluating outputs to prevent overdependence.

While AI can scaffold learning processes, Broadbent et al. (2019) emphasise that the responsibility for regulation ultimately lies with students. For educators, the implication is to design activities that explicitly prompt forethought, monitoring, and reflection, ensuring that GenAI use becomes a catalyst for deeper learning rather than a substitute for it.

Multimedia Learning Theory

Following Mayer’s (2020) principles, we designed our intervention to segment content, personalise pathways, and integrate interactive elements to reduce cognitive load and sustain engagement. Central to this design were scenario-based activities that encouraged students to practise ethical reasoning and transfer judgment skills into their studies.

The Challenge: “Am I Allowed to Use This?”

From 2023, a growing sense of uncertainty spread across university campuses. GenAI had rapidly entered the academic world, and while students were curious and increasingly engaged with these emerging tools, many were also deeply anxious. Our finding is consistent with studies highlighting widespread student concerns around academic integrity and over-reliance on these new tools (Wang et al., 2024). Throughout this period, one question consistently surfaced in our conversations with students, both implicitly and explicitly: “Am I allowed to use this?” What appeared to be a simple question revealed a multifaceted set of concerns, including fears of unintentionally committing academic misconduct, confusion over inconsistent course guidelines, and uncertainty about where the boundary lay between legitimate assistance and academic dishonesty. More critically, this uncertainty was actively impeding learning. Students reported:

- Avoiding potentially beneficial AI tools entirely, missing opportunities for enhanced learning.

- Spending excessive mental energy worrying about compliance rather than focusing on learning.

- Developing surface-level approaches to assignments to avoid any risk of misconduct.

- Experiencing increased stress that negatively impacted their overall academic performance.

Our initial research confirmed these findings. A survey conducted in Semester 2, 2023 revealed that although 74.5% of 322 science students had experience using GenAI, a significant majority expressed discomfort or uncertainty about its appropriate use (SLOCI, 2023). This concern was further supported by research from Turnitin, which found that 64% of students reported worry about AI use, exceeding the 50% of educators who expressed similar concerns. This feeling of uncertainty persisted into Semester 2, 2024, as students continued to raise it during focus group discussions. As one student shared,

“I’m quite worried about academic integrity. It would be good to have guidelines on how I can use it for assignments without getting in trouble.”

(FG4, Student 1)

Similar expressions were echoed widely across both the St Lucia and Gatton campuses. Our data revealed that students were navigating an unclear and evolving space on their own, often turning to peers, online forums, or social media for advice in the absence of consistent and accessible institutional guidance.

It became clear that policy alone would not be enough. What was needed was a shift from rule enforcement to student empowerment. Our goal emerged with clarity: to design an educational resource that would:

- Provide clear, actionable guidance.

- Support the development of metacognitive skills for ethical AI use.

- Foster critical evaluation capabilities essential for scientific practice.

- Build student confidence in making informed judgments about AI integration.

- Develop AI literacy as a transferable professional competency.

This goal aligns with the progression levels of UNESCO’s AI Competency Frameworks, including enabling students to transition from foundational understanding to practical application (Cukurova & Miao, 2024; Miao & Shiohira, 2024).

Our Approach: Listening First, Building Second

From the outset, our design philosophy was guided by three core principles: to be student-centred, evidence-based, and genuinely collaborative. We recognised that while educators bring pedagogical expertise, students possess invaluable knowledge about their lived experiences, digital practices, and the emotional dimensions of learning with AI. This complementary expertise made genuine partnership essential.

To achieve this, we used a methodology known in educational research as Design-Based Research (DBR). In simple terms, this meant we worked in an iterative cycle of design, feedback, and refinement. We would create the resource, test it with students and staff, gather their feedback, and then use that input to make it better (Wang & Hannafin, 2005). This process ensured that our project remained grounded in the authentic needs of our community rather than our own assumptions about what they needed.

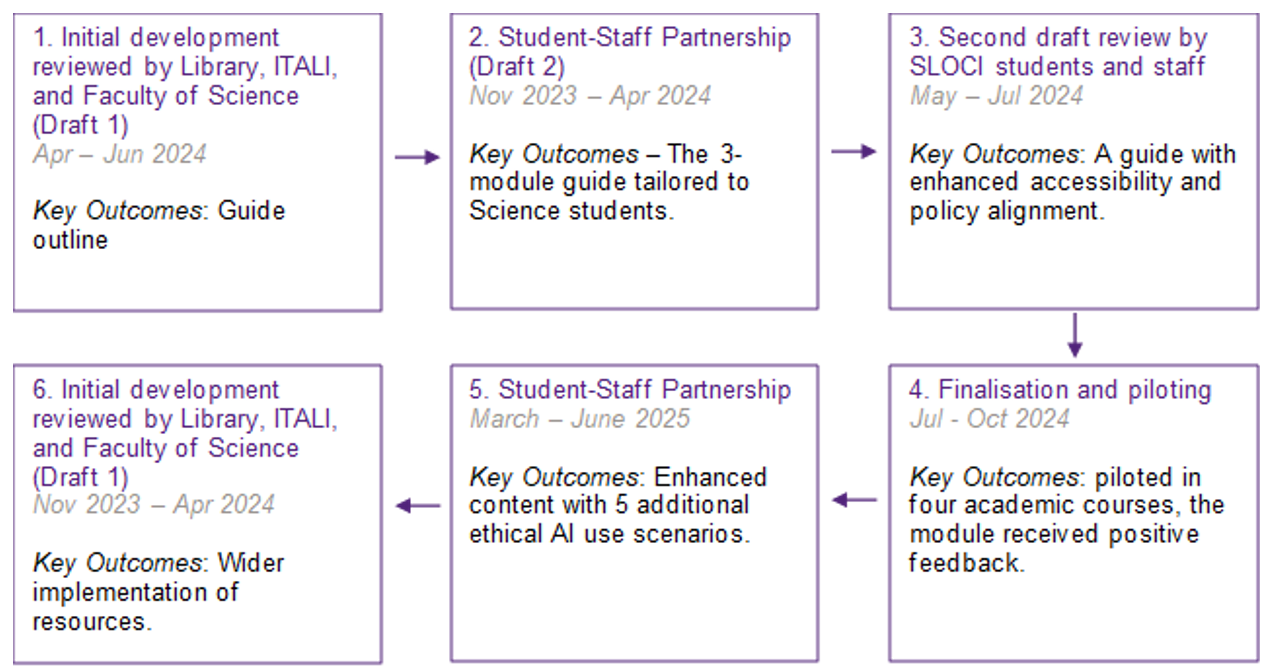

Figure 2 Key steps of our guide development process

The Power of Student Partnership

A critical part of our collaborative approach was our Student–Staff Partnership (SSP). In Semester 1, 2024, we partnered with four students who acted as co-designers, reviewers, and our most valuable critics of the Guide. In Semester 1, 2025, we continued this collaboration with a new SSP team, including three returning students, who worked specifically on the development of five ethical AI use scenarios. Additionally, Science Student Led Observation for Course Improvements (SLOCI) students collected diverse student perspectives on GenAI use, as well as on our Guide, throughout 2023–2025. These student partners brought essential expertise about:

- The lived experience of navigating AI uncertainty in their courses.

- Their digital literacy practices and preferences.

- Emotional and social dimensions of AI use among peers.

- Practical insights into what makes educational resources engaging and accessible in their studies.

Ethical and inclusive principles were central to this process. We drew on principles for effective partnership (Matthews, 2017) as we interpreted guidance about ethical AI use together. Ethical practice was enacted through scenarios that modelled transparency, fairness, and academic integrity. Inclusion was built into the design by involving undergraduate and postgraduate students from multiple science disciplines, ensuring diverse perspectives. Multimodal design choices (e.g., combining video, text, and interactive elements) also supported accessibility and engagement for students with varied learning needs.

The diversity of the student team enriched our design process significantly. As one student partner reflected:

“This co-design partnership integrated diverse perspectives from students and staff across different learning and professional backgrounds within the Faculty of Science. The student team included both undergraduate students from biomedical sciences and biology, as well as postgraduate students from occupational health and safety and physics. This diversity created opportunities to learn from each other’s experiences across different disciplines.”

(Student Partner Reflection, 2025)

Their influence was crucial. For example, our early drafts of the module were text-heavy and theoretical. Student partners were candid in their feedback, noting that students would likely lose interest. This aligns with research showing that overly long or unfocused computer-based training modules can reduce learner motivation and limit learning outcomes (Peterson & McCleery, 2014). Their input prompted a shift away from dense paragraphs towards interactive scenarios, short videos, and practical examples, elements that became the most highly rated features of the module.

The student partners’ approach was both systematic and creative. As one partner described:

“The development followed an iterative approach, beginning with an initial design plan that was refined through feedback incorporating student experiences and course requirements. Throughout the process, we consulted with teaching and learning design staff and fellow students to gather user feedback and ensure the resource met its intended purpose.”

(Student Partner Reflection, 2025)

This iterative process led to a more effective guide, for example through the development of

“a concise, readable table format for levels of GenAI use that presented guidelines targeted for specific use cases”,

(Student Partner Reflection, 2025)

a direct response to student needs, achieved through co-design for clarity and accessibility.

The collaboration was also beneficial for the student partners themselves. One partner emphasised that

“the most meaningful aspect of this involvement was the valuable networking opportunities it provided, which opened doors to working with staff in other capacities, including research assistant positions.”

(Student Partner Reflection, 2025)

Another student partner noted:

“This project provided a meaningful opportunity to express my needs and expectations regarding GenAI-related resources and guidance, which directly informed the production of a tool that could specifically meet those needs. Moreover, being embedded in the development process offered me valuable insight into the complexities and challenges involved in designing educational resources, including ethical guidelines, which deepened my appreciation for the nuances of the process and taught me valuable lessons in content creation, information design and data collection.”

(Student Partner Reflection, 2025)

The importance of genuine student partnership cannot be overstated. As one partner articulated:

“Student involvement as partners in creating educational resources ensures that materials developed for students actually meet student needs.”

(Student Partner Reflection, 2025)

This insight captures why our collaborative approach was essential, not just for creating a better resource, but for modelling the very principles of ethical engagement and shared agency that we sought to teach.

The Intervention: The Generative AI Essential Guide

Defining Our Ethical Framework

Before describing the guide’s structure, it was important to articulate what we meant by “ethical” and “inclusive” AI use in the context of science education. Our framework was informed by national and international guidelines, including the Australian Government AI Ethics Principles (2020), the UNESCO Recommendation on the Ethics of Artificial Intelligence (2021), and UQ’s institutional policies on academic integrity and accessibility. These provided a foundation which we then adapted through student–staff partnership to the specific needs of science students.

Ethical AI use in science education includes:

- Academic Integrity: Ensuring AI is used in ways that preserve the authenticity of students’ own learning and assessment processes (aligned with UQ Academic Integrity policy).

- Transparency: Encouraging students to openly disclose when and how they use AI tools, consistent with principles of explainability in national frameworks.

- Critical Evaluation: Cultivating scepticism about AI outputs and fostering habits of verification and cross-checking, consistent with established approaches to metacognition and self-regulated learning (Zimmerman, 2002; Broadbent et al., 2019).

- Learning Enhancement: Positioning AI as a support for deeper understanding rather than a shortcut that bypasses key disciplinary processes.

- Equity: Ensuring AI use does not exacerbate existing inequities, reflecting UNESCO’s emphasis on inclusive and human-centred AI.

The inclusive design principles we followed included:

- Multimodal Delivery: Offering text, video, and interactive activities to support varied learning preferences (Mayer, 2020).

- Cultural Sensitivity: Recognising that students’ digital practices and attitudes toward technology are shaped by diverse cultural contexts.

- Accessibility: Ensuring all resources met WCAG 2.1 AA standards, aligned with UQ accessibility guidelines.

- Reduced Barriers: Avoiding unnecessary jargon, scaffolding complex ideas, and making AI concepts approachable for all students.

The result of our collaborative process was the Generative AI Essential Guide: Unlocking Learning Sciences, an open-access, multimodal online module. The module includes scenarios, videos, and interactive elements designed to make ethical practice clear and practical. In line with principles of scaffolding, the scenarios progressed from basic applications (e.g., grammar correction) to more complex tasks (e.g., using AI to critique arguments). The design also drew on cognitive load theory, presenting content in short videos and concise tables to reduce overwhelm, and on metacognitive approaches that prompted students to reflect on when AI supported or hindered their understanding. We structured the guide to take students on a journey from basic understanding to confident application:

- Module 1: Introduction to GenAI: Covered the fundamentals, including what these tools are, their capabilities, and their technical and ethical limitations.

- Module 2: Responsible Use of GenAI: Addressed ethics head-on, providing checklists and clear guidance on how to use AI in line with university academic integrity policies.

- Module 3: Practical Application of GenAI: Offered concrete, discipline-specific examples of how to use AI to support learning, including example uses in some assessment tasks and in career development.

To make the ethical considerations tangible, we developed a series of interactive scenarios based on the very tensions our research had uncovered in Semester 2, 2024.

Example: The Study & Exam Preparation Scenario

Alex, a science student, is preparing for the final CHEM1200 exam, a 40% centrally scheduled, paper-based hurdle exam. The course explicitly prohibits AI use during the exam but does not specify whether AI tools can be used to support study beforehand. Like many students, Alex wonders, “Can I use AI to study without crossing a line?”

To navigate this, Alex experiments with several GenAI tools. For instance, they use Claude to generate multiple-choice questions for self-testing:

Prompt: “Give me five multiple-choice questions to test my understanding of transition metals as taught in CHEM1200 at UQ.”

After answering, Alex checks the responses critically and notes areas needing review. Rather than accepting feedback passively, Alex revisits lecture notes to confirm explanations. This active engagement illustrates responsible use: AI supports study but does not replace effort.

Alex also uses ChatGPT to explain difficult chemistry concepts, asking:

Prompt: “Explain the concept of NMR Spectroscopy in simple terms, with an example.”

This prompt scaffolds understanding without offering a shortcut.

In another case, Alex prompts ChatGPT to act as a tutor:

Prompt: “Act as a Socratic tutor to help me understand acids and bases as taught in UQ’s CHEM1200.”

This helps Alex refine conceptual understanding through critical dialogue, mirroring traditional tutoring in an ethical way.

The scenario prompts students to consider the risks and opportunities in each use case:

- Risks: Over-reliance, potential copyright breaches, AI hallucinations, and lack of critical engagement.

- Opportunities: Personalised learning, formative feedback, spaced repetition (e.g., Anki flashcards), and time-efficient revision through summaries or podcasts.

A critical decision point is included:

Is it ethical to use AI to generate answers to past exam papers from the UQ library?

The scenario guides students to realise that uploading copyrighted material is inappropriate. However, using AI to practise responses (without uploading content) can be ethical, if answers are verified and AI is treated as a study partner, not a solution machine. This scenario explicitly addresses the tension between efficiency and deep learning. Through guided reflection, students consider:

- When AI summarisation supports learning (e.g., initial orientation to complex topics) versus when it might bypass important cognitive processes.

- How to use AI as a dialogue partner for deeper understanding rather than a shortcut.

- The importance of verifying AI outputs against authoritative sources.

- Strategies for maintaining active engagement when using AI tools.

This scenario, co-developed with student partners, models how ethical engagement with GenAI can support deeper learning. It reinforces our principle: use AI to learn, not to shortcut learning. Through scenario-based reflection, students learn to evaluate AI’s role in their study practice critically, reducing anxiety and promoting academic integrity.

Did It Work? Shifts in Student Confidence and Understanding

We piloted the module with 235 students across four science courses, and the evaluation results were highly encouraging. Overall, 80% of students surveyed agreed or strongly agreed that the module was valuable for understanding how to integrate GenAI into their studies ethically.

Module 3, which explored practical applications of generative AI, was also well-received, with respondents valuing the hands-on activities and real-world scenarios. One student noted,

“The hands-on examples helped clarify how AI could be used effectively and ethically in academic work.”

(Student Feedback, Sem 1, 2025)

This comment is supported by one SLOCI student’s module review:

“Students say that they don’t use GenAI because they do not know about it, what it is, or how it can be used. This course informs students and advises them of how GenAI can be used and integrated into university (both study and assessment). This content is beneficial and suitable for Students.”

(SLOCI student module review)

Most importantly, we saw a clear shift from anxiety to confidence. After completing the module, 60% of students reported feeling “confident” or “strongly confident” in applying GenAI tools to their studies—a significant increase. Students’ qualitative feedback highlighted the value of the clear, practical guidance. As one student noted,

“[The module] helps to clear the procedure for new GenAI technology and use of it in a study space and shows how it can be used to help learning in an ethical manner.”

Another praised the multimodal approach:

“The content was given in different ways…and therefore I was entertained, and it kept me interested.”

Despite these successes, several areas for improvement were identified. While most students found the course easy to navigate, some felt that the structure could be simplified. One respondent commented,

“For me, it was too much text content and long videos for it to be fully engaging.”

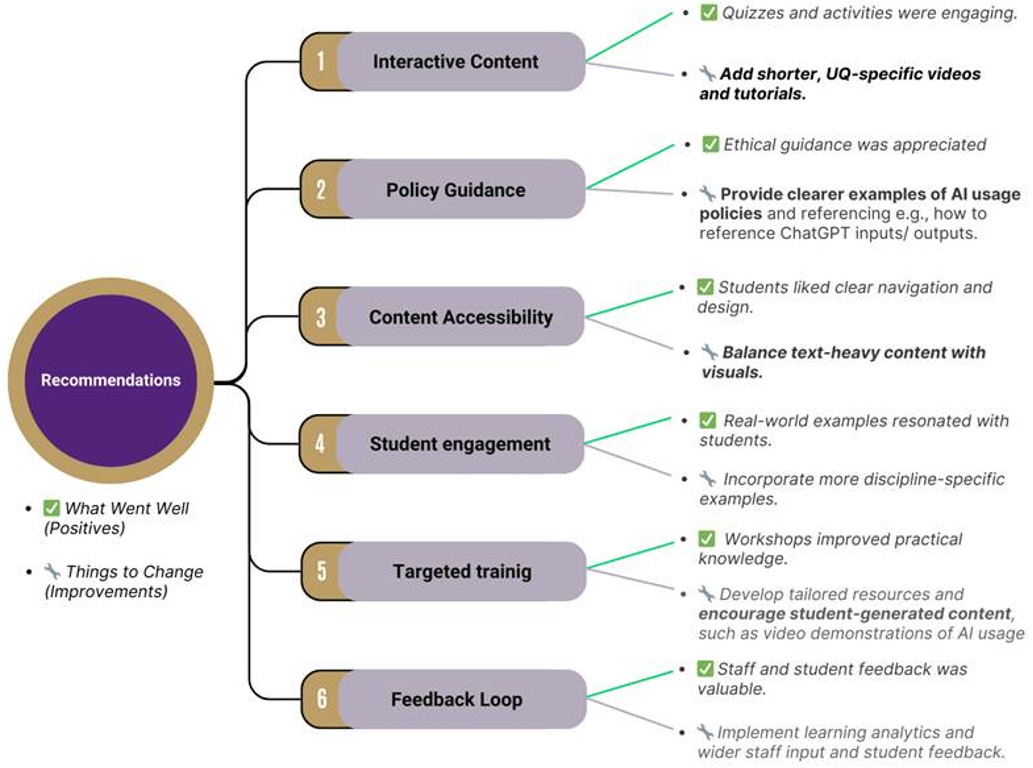

Students also requested shorter, more dynamic videos to enhance engagement, and feedback indicated that text-heavy sections, particularly in Module 1, could be condensed to improve accessibility. Additionally, some participants highlighted the need for clearer guidance on referencing AI-generated content and understanding academic integrity policies. Figure 3 summarises the key recommendations for future iterations of the module, drawn from student and staff feedback on the Semester 2, 2024 implementation, highlighting both what worked well and what could be improved.

Figure 3 Recommendations for future iterations of the Generative AI guide, highlighting strengths (What Went Well) and areas for improvement (Things to Change).

In response to these findings, in Semester 1, 2025, we created shorter videos, reduced text-based content, and incorporated more interactive elements such as quizzes and scenario-based activities. We also provided clearer, step-by-step examples for referencing AI-generated work, addressing a key concern raised by students. The pilot testing demonstrated the module’s potential to build students’ confidence and skills in using generative AI responsibly, and with these adjustments, it can become a useful learning resource. We implemented the revised guide in Semester 2, 2025.

Our Reflections: What We Learned Along the Way

As a project team, our biggest realisation was that the GenAI challenge was not simply technological; it was deeply human. We initially approached the project focused on tools, features, and functionality. But what surfaced most clearly was the emotional weight students carried in the absence of clear guidance. Many expressed a genuine fear of doing the wrong thing by accident, which led to:

- Risk-averse learning behaviours that limited exploration and creativity.

- Surface approaches to assignments designed to avoid any AI-related risks.

- Missed opportunities for developing critical AI literacy skills.

This reframed our purpose: from merely delivering information to cultivating psychological safety. We learned that when integrating disruptive technologies, our first responsibility as educators is to listen to students’ concerns and build a bridge of trust and clarity that supports ethical learning while maintaining academic rigour. A critical insight emerged around the balance between AI assistance and cognitive engagement. We observed that students needed explicit guidance on:

- Distinguishing between AI use that scaffolds learning and use that bypasses it.

- Developing personal criteria for appropriate AI assistance.

- Building habits of critical evaluation and verification.

- Maintaining ownership of their learning process.

We recognised that guidance at the institutional level needed to work for all disciplines; however, this also meant that the guidance had to be abstracted from practice. Initially, we hoped that focusing within science would provide sufficient context to ground guidance on using AI. Yet even across science disciplines, practices varied significantly, and it became clear that offering consistent, specific guidance was not feasible. In many situations, this level of abstraction may be appropriate, but with the rapid evolution of GenAI and the potential for misconduct if guidance is misinterpreted, such abstraction carries risk. Through our partnership, we found ways to offer concrete, contextualised guidance across a range of courses, balancing specific examples with the diversity of practice across the faculty.

There were, of course, challenges. Student feedback indicated that the module took longer to complete than intended, highlighting the need to condense some of the content. Another reflection concerned the cognitive trade-offs of AI use. While students valued AI for efficiency (e.g., summarising content), we recognised the risk of bypassing deeper disciplinary thinking. To address this, the Guide emphasised strategies for verifying AI outputs, prompting reflection, and re-engaging with original materials, ensuring AI supported rather than replaced critical learning processes. Several students said they only discovered the guide by chance, highlighting a gap in its promotion and integration. To be impactful, the guide must be embedded meaningfully into courses, something we aim to improve in future iterations.

One concern raised during the SSP project was the fear that we might invest significant time creating a resource that would ultimately go unused. As one of our team expressed,

“In order for the guide to work, academics might need to use it.”

When the guide is treated as an optional extra, or a “tick-the-box” resource, its impact is limited. Blended learning approaches, in which the guide is referenced in class discussions, assessment briefings, or tutorials, were seen as more effective, consistent with Park and Doo (2024). Behind the scenes, the work of co-designing such a resource also revealed emotional labour. Our team sometimes faced frustration when encountering conflicting views or dismissive attitudes about GenAI. As one team member reflected,

“Dealing with extremely different opinions among teaching staff can be frustrating. Sometimes, negative comments are hurtful.”

These moments reminded us that innovation requires not only good design but resilience, collaboration, and shared purpose. Looking ahead, we carry forward these lessons with renewed commitment: to centre student voices, foster educator dialogue, and continue building an ethically grounded, pedagogically sound approach to GenAI integration in higher education.

Conclusion: Key Principles for Your Own Practice

This project reinforced our belief that proactive, empathetic pedagogy is an effective response to disruptive technological change. For fellow educators navigating this new landscape, our experience suggests the following key principles:

- Co-design with Students: Students may not be experts in pedagogy, but they are experts in their lived experience, digital habits, and emotional responses to learning. This form of expertise was critical for shaping resources that addressed authentic needs, while being complemented by staff pedagogical and disciplinary knowledge.

- Bridge the Gap Between Policy and Practice: Don’t just tell students the rules. Use concrete examples and interactive scenarios to show them how to apply those rules in the messy, real-world contexts of their coursework.

- Use Multimodality: To keep students engaged with complex topics, use a variety of media formats. Videos, interactive elements, and clear visuals can make abstract ethical concepts more accessible and memorable.

- Address the Anxiety, Not Just the Technology: Recognise that student uncertainty is an emotional issue, not just a cognitive one. Frame your guidance with empathy to build trust and create a safe environment for learning and experimentation.

- Embrace the Change and Model Lifelong Learning: As GenAI continues to evolve, educators must also deepen their own understanding. Firsthand engagement with AI tools helps build practical knowledge, but technical familiarity alone is not enough. AI can be used meaningfully in teaching if we reflect on the ethical implications of its integration (Mishra et al., 2023). Emerging research suggests that teachers require a combination of technological, pedagogical, and ethical knowledge to effectively guide students in AI-supported learning environments. This aligns with expanded frameworks such as Intelligent-TPACK, which reconceptualises the teacher’s role not just as a user of technology, but as an orchestrator of ethically informed, pedagogically grounded AI-based instruction (Celik, 2023).

Key Lessons and Unexpected Insights

Through this journey, three key lessons emerged that we believe are transferable to other contexts:

- The Paradox of Support: We discovered that students often need the most guidance precisely when they’re least likely to seek it. The anxiety about “doing the wrong thing” created a paralysis that prevented students from engaging with available resources. This taught us the importance of proactive, embedded support rather than optional add-on resources.

- The Power of Peer Legitimacy: An unexpected insight was how student partners became informal ambassadors for the guide. Their involvement gave the resource credibility among peers that no amount of institutional endorsement could achieve. Consider how peer champions might amplify your own initiatives.

- The Evolution of Ethics: We learned that ethical AI use isn’t a fixed set of rules but an evolving practice requiring ongoing judgment. Our most successful components were those that developed students’ decision-making frameworks rather than prescriptive dos and don’ts.

Advice for Educators

For those engaging in similar work, we offer this advice:

- Start with Empathy, not Technology. Understand the emotional and cognitive dimensions of student experience before designing solutions.

- Embrace Productive Discomfort. The tensions between efficiency and deep learning, between support and self-reliance, cannot be resolved, only navigated thoughtfully.

- Build for Adaptation, not Adoption. Create resources that educators can customise for their contexts rather than one-size-fits-all solutions.

- Measure What Matters. Look beyond usage statistics to assess impact on student confidence, metacognitive development, and learning quality.

Ultimately, generative AI is not a temporary disruption; it is becoming a defining feature of academic and professional life. Our role as educators is not to restrict its use, but to guide it. By listening to our students and collaborating with them, we can move them from a place of anxiety to a position of agency, empowering them to become confident, critical, and ethical users of the tools that will shape their futures.

Acknowledgements

We would like to extend our gratitude to the Faculty of Science staff and the wider UQ community, who contributed significantly to this project. We are also grateful to the Student–Staff Partnership team for their ongoing support throughout 2024–2025. In particular, we wish to thank:

- Academic consultants: Professor Lydia Kavanagh, Professor Gwendolyn Lawrie, Professor Scott Chapman, Dr Peter Crisp, Dr Suresh Krishnasamy, Dr Nan Ye, Dr William Woodgate, Professor Stuart Phinn

- Professional staff consultants: Dale Hansen, Garvin Keir, Kellie Ashley, and the UQ Student-Staff Partnership team

- Student partners: Dashiell Young, Hana Nuriy Rahmawati Purwanto, Lachlan Miller, Rupashi Nehra

- Editor: Associate Professor Rachel Fitzgerald

Ethical approval

This project received ethical approval from The University of Queensland’s Human Research Ethics Committee (2024/HE001346).

AI Use Declaration

This chapter was prepared with assistance from ChatGPT and Claude, which supported aspects of writing and editing for clarity. All ideas, findings, and interpretations are the authors’ own, and the AI did not generate or analyse primary data or replace academic judgement.

References

Celik, I. (2023). Towards Intelligent-TPACK: An empirical study on teachers’ professional knowledge to ethically integrate artificial intelligence (AI)-based tools into education. Computers in Human Behavior, 138, 107468. https://doi.org/10.1016/j.chb.2022.107468

Cukurova, M., & Miao, F. (2024). AI competency framework for teachers. UNESCO Publishing. https://unesdoc.unesco.org/ark:/48223/pf0000391104

SLOCI (Student Led-Observation for Course Improvement). (2024). Semester 2, 2024 Faculty of Science generative AI focus group. Faculty of Science. The University of Queensland.

SLOCI (Student Led-Observation for Course Improvement). (2023). Semester 2, 2023 Faculty of Science generative AI project final report. Faculty of Science. The University of Queensland.

Lodge, J. M., Kennedy, G., Lockyer, L., Arguel, A., & Pachman, M. (2018). Understanding difficulties and resulting confusion in learning: An integrative review. Frontiers in Education, 3, 49. https://doi.org/10.3389/feduc.2018.00049

Matthews, K. E. (2017). Five propositions for genuine students as partners practice. International Journal for Students as Partners, 1(2), 1–9. https://doi.org/10.15173/ijsap.v1i2.3315

Mayer, R. E. (2020). Multimedia learning (3rd ed.). Cambridge University Press.

McDonald, N., Johri, A., Ali, A., & Collier, A. H. (2025). Generative artificial intelligence in higher education: Evidence from an analysis of institutional policies and guidelines. Computers in Human Behavior: Artificial Humans, 100121. https://doi.org/10.1016/j.chbah.2025.100121

Miao, F., & Shiohira, K. (2024). AI competency framework for students. UNESCO Publishing. https://unesdoc.unesco.org/ark:/48223/pf0000391105

Mishra, P., Warr, M., & Islam, R. (2023). TPACK in the age of ChatGPT and Generative AI. Journal of Digital Learning in Teacher Education, 39(4), 235–251. https://doi.org/10.1080/21532974.2023.2247480

Park, Y., & Doo, M. Y. (2024). Role of AI in blended learning: A systematic literature review. International Review of Research in Open and Distance Learning, 25(1), 164–196. https://doi.org/10.19173/irrodl.v25i1.7566

Peterson, K., & McCleery, E. (2014). Evidence brief: The effectiveness of mandatory computer-based trainings on government ethics, workplace harassment, or privacy and information security-related topics. Department of Veterans Affairs (US). https://www.ncbi.nlm.nih.gov/books/NBK384612/

TEQSA. (2024). Gen AI strategies for Australian higher education: Emerging practice. https://www.teqsa.gov.au/sites/default/files/2024-11/Gen-AI-strategies-emerging-practice-toolkit.pdf

Taptamat, N., Holt, M., & McGrath, D. (2024). Generative AI for learning sciences guide implementation. Faculty of Science. The University of Queensland.

Turnitin. (2024). Crossroads: Navigating the intersection of AI in education. https://www.turnitin.com/whitepapers/ai-in-education

Wang, F., & Hannafin, M. J. (2005). Design-based research and technology-enhanced learning environments. Educational Technology Research and Development, 53(4), 5–23.

Wang, K. D., Wu, Z., Tufts, L. N. I., Wieman, C., Salehi, S., & Haber, N. (2024). Scaffold or crutch? Examining college students’ use and views of generative AI tools for STEM education. Physics Education. https://doi.org/10.48550/arXiv.2412.02653

Zimmerman, B. J. (2002). Becoming a self-regulated learner: An overview. Theory into Practice, 41(2), 64–70. https://doi.org/10.1207/s15430421tip4102_2