Learning outcomes

The learning outcomes of this chapter are:

- Manually apply n-step reinforcement learning approximation to solve small-scale MDP problems.

- Design and implement n-step reinforcement learning to solve medium-scale MDP problems automatically.

- Argue the strengths and weaknesses of n-step reinforcement learning.

Overview

In the previous sections on of this chapter, we looked at two fundamental temporal difference (TD) methods for reinforcement learning: Q-learning and SARSA.

These two methods have some weaknesses in this basic format:

- Unlike Monte-Carlo methods, which reach a reward and the backpropagate this reward, TD methods use bootstrapping (they estimate the future discounted reward using [latex]Q(s,a)[/latex]), which means that for problems with sparse rewards, it can take a long time to for rewards to propagate throughout a Q-function.

- Rewards can be sparse, meaning that there are few state/actions that lead to non-zero rewards. This is problematic because initially, reinforcement learning algorithms behave entirely randomly and will struggle to find good rewards.

- Both methods estimate a Q-function [latex]Q(s,a)[/latex], and the simplest way to model this is via a Q-table. However, this requires us to maintain a table of size [latex]|A| \times |S|[/latex], which is prohibitively large for any non-trivial problem.

- Using a Q-table requires that we visit every reachable state many times and apply every action many times to get a good estimate of [latex]Q(s,a)[/latex]. Thus, if we never visit a state [latex]s[/latex], we have no estimate of [latex]Q(s,a)[/latex], even if we have visited states that are very similar to [latex]s[/latex].

To get around limitations 1 and 2, we are going to look at n-step temporal difference learning: ‘Monte Carlo’ techniques execute entire episodes and then backpropagate the reward, while basic TD methods only look at the reward in the next step, estimating the future wards. n-step methods instead look [latex]n[/latex] steps ahead for the reward before updating the reward, and then estimate the remainder. In future parts of these notes, we’ll look at techniques for mitigating limitations 3 and 4.

n-step TD learning comes from the idea that Monte-Carlo methods can be useful for providing more information for Q-function updates than just temporal difference estimates. Monte Carlo methods uses ‘deep backups’, where entire episodes are executed and the reward backpropagated. Methods such as Q-learning and SARSA use ‘shallow backups’, only using the reward from the 1-step ahead. n-step learning finds the middle ground: only update the Q-function after having explored ahead [latex]n[/latex] steps.

n-step TD learning

We will look at n-step reinforcement learning, in which [latex]n[/latex] is the parameter that determines the number of steps that we want to look ahead before updating the Q-function. So for [latex]n=1[/latex], this is just “normal” TD learning such as Q-learning or SARSA. When [latex]n=2[/latex], the algorithm looks one step beyond the immediate reward, [latex]n=3[/latex] it looks two steps beyond, etc.

Both Q-learning and SARSA have an n-step version. We will look at n-step learning more generally, and then show an algorithm for n-step SARSA. The version for Q-learning is similar.

Intuition

The details and algorithm for n-step reinforcement learning making it seem more complicated than it really is. At an intuitive level, it is quite straightforward: at each step, instead of updating our Q-function or policy based on the reward received from the previous action, plus the discounted future rewards, we update it based on the last [latex]n[/latex] rewards received, plus the discounted future rewards from [latex]n[/latex] states ahead.

Consider the following interative gif, which shows the update over an episode of five actions. The bracket represents a window size of [latex]n=3[/latex]:

At time [latex]t=0[/latex], no update can be made because there is no action.

At time [latex]t=1[/latex], after action [latex]a_0[/latex], no update is done yet. In standard TD learning, we would update [latex]Q(s_0, s_0)[/latex] here. However, because we have only one reward instead of three ([latex]n=3[/latex]), we delay the update.

At time [latex]t=2[/latex], after action [latex]a_1[/latex], again we do not update because we have just two rewards, instead of three.

At time [latex]t=3[/latex], after action [latex]a_2[/latex], we have three rewards, so we do our first update. But note: we update [latex]Q(s_0, a_0)[/latex] – the first state-action pair in the sequence, rather than the most recent state action pair [latex](s_2,a_2)[/latex]. Notice that we consider the rewards [latex]r_1[/latex], [latex]r_2[/latex], and [latex]r_3[/latex] (appropriately discounted), and use the discounted future reward [latex]V(s_3)[/latex]. Effectively, the update of [latex]Q(s_0, a_0)[/latex] “looks forward” three actions into the future instead one, because [latex]n=3[/latex].

At time [latex]t=4[/latex], after action [latex]a_3[/latex], the update is similar: we now update [latex]Q(s_1, a_1)[/latex].

At time [latex]t=5[/latex], it is similar again, except that state [latex]s_5[/latex] is a terminal state, so we do not include the discounted future reward – there is no state [latex]s_6[/latex] such that we can estimate [latex]V(s_6)[/latex], so it is omitted.

At time [latex]t=6[/latex], we can see that the window slides beyond the length of the episode. However, even though we have reached the terminal state, we continue updating — this time updating [latex]Q(s_3, s_3)[/latex]. We need to do this because we have still not updated the Q-values for all state-action pairs that we have executed.

At time [latex]t=7[/latex], we do the final update — this time for [latex]Q(s_4,a_4)[/latex]; and the episode is complete.

So, we can see that, intuitively, [latex]n[/latex]-step reinforcement learning is quite straightfoward. However, to implement this, we need data structures to keep track of the last [latex]n[/latex] states, actions, and rewards, and modifications to the standard TD learning algorithm to both delay Q-value updates in the first [latex]n[/latex] steps of an episode, and to continue updating beyond the end of the episode for ensure the last [latex]n[/latex] state-action pairs are updated. This “book-keeping” code can be confusing at first, unless we already have an intuitive understanding of what it achieves.

Discounted Future Rewards (again)

When calculating a discounted reward over a episode, we simply sum up the rewards over the episode:

[latex]G_t = r_1 + \gamma r_2 + \gamma^2 r_3 + \gamma^3 r_4 + \ldots[/latex]

We can re-write this as:

[latex]G_t = r_1 + \gamma(r_2 + \gamma(r_3 + \gamma(r_4 + \ldots)))[/latex]

If [latex]G_t[/latex] is the value received at time-step [latex]t[/latex], then

[latex]G_t = r_t + \gamma G_{t+1}[/latex]

In TD(0) methods such as Q-learning and SARSA, we do not know [latex]G_{t+1}[/latex] when updating [latex]Q(s,a)[/latex], so we estimate using bootstrapping:

[latex]G_t = r_t + \gamma \cdot V(s_{t+1})[/latex]

That is, the reward of the entire future from step [latex]t[/latex] is estimated as the reward at [latex]t[/latex] plus the estimated (discounted) future reward from [latex]t+1[/latex]. [latex]V(s_{t+1})[/latex] is estimated using the maximum expected return (Q-learning) or the estimated value of the next action (SARSA).

This is a one-step return.

Truncated Discounted Rewards

However, we can estimate a two-step return:

[latex]G^2_t = r_t + \gamma r_{t+1} + \gamma^2 V(s_{t+2})[/latex]

a three-step return:

[latex]G^3_t = r_t + \gamma r_{t+1} + \gamma^2 r_{t+2} + \gamma^3 V(s_{t+3})[/latex]

or n-step returns:

[latex]G^n_t = r_t + \gamma r_{t+1} + \gamma^2 r_{t+2} + \ldots \gamma^n V(s_{t+n})[/latex]

In this above expression [latex]G^n_t[/latex] is the full reward, truncated at [latex]n[/latex] steps, at time [latex]t[/latex].

The basic idea of n-step reinforcement learning is that we do not update the Q-value immediately after executing an action: we wait [latex]n[/latex] steps and update it based on the n-step return.

If [latex]T[/latex] is the termination step and [latex]t + n \geq T[/latex], then we just use the full reward.

In Monte-Carlo methods, we go all the way to the end of an episode. Monte-Carlo Tree Search is one such Monte-Carlo method, but there are others that we do not cover.

Updating the Q-function

The update rule is then different. First, we need to calculate the truncated reward for [latex]n[/latex] steps, in which [latex]\tau[/latex] is the time step that we are updating for (that is, [latex]\tau[/latex] is the action taken [latex]n[/latex] steps ago):

[latex]G \leftarrow \sum^{\min(\tau+n, T)}_{i=\tau+1}\gamma^{i-\tau-1}r_i[/latex]

This just sums the discounted rewards from time step [latex]\tau+1[/latex] until either [latex]n[/latex] steps ([latex]\tau+n[/latex]) or termination of the episode ([latex]T[/latex]), whichever comes first.

Then calculate the n-step expected reward:

[latex]\text{If } \tau+n < T \text{ then } G \leftarrow G + \gamma^n Q(s_{\tau+n}, a_{\tau+n}).[/latex]

This adds the future expect reward if we are not at the end of the episode (if [latex]\tau+n < T[/latex]).

Finally, we update the Q-value:

[latex]Q(s_{\tau}, a_{\tau}) \leftarrow Q(s_{\tau}, a_{\tau}) + \alpha[G - Q(s_{\tau}, a_{\tau}) ][/latex]

In the update rule above, we are using a SARSA update, but a Q-learning update is similar.

n-step SARSA

While conceptually this is not so difficult, an algorithm for doing n-step learning needs to store the rewards and observed states for [latex]n[/latex] steps, as well as keep track of which step to update. An algorithm for n-step SARSA is shown below.

Algorithm 6 (n-step SARSA)

This is similar to standard SARSA, except that we are storing the last [latex]n[/latex] states, actions, and rewards; and also calculating the rewards on the last five rewards rather than just one. The variables [latex]ss[/latex], [latex]as[/latex], and [latex]rs[/latex] as the list of the last [latex]n[/latex] states, actions, and rewards respectively. We use the syntax [latex]ss_i[/latex] to get the [latex]i^{th}[/latex] element of the list, and the Python-like syntax [latex]ss_{[1:n+1]}[/latex] to get the elements between indices 1 and [latex]n+1[/latex] (remove the first element).

As with SARSA and Q-learning, we iterate over each step in the episode. The first branch simply executes the selected action, selects a new action to apply, and stores the state, action, and reward.

It is the second branch where the actual learning happens. Instead of just updating with the 1-step reward [latex]r[/latex], we use the [latex]n[/latex]-step reward [latex]G[/latex]. This requires a bit of “book-keeping”. The first thing we do is calculate [latex]G[/latex]. This simply sums up the elements in the reward sequence [latex]rs[/latex], but remembering that they must be discounted based on their position in [latex]rs[/latex]. The next line adds the TD-estimate [latex]y^n Q(s',a')[/latex] to [latex]G[/latex], but only is the most recent state is not a terminal state. If we have already reached the end of the episode, then we must exclude the TD-estimate of the future reward, because there will be no such future reward. Of importance, also note that we multiple this by [latex]\gamma^n[/latex] instead of [latex]\gamma[/latex]. Why? This because the future estimated reward is [latex]n[/latex] steps from state [latex]ss_0[/latex]. The [latex]n-step[/latex] reward in [latex]G[/latex] somes first. Then we do the actualy update, which updates the state-action pair [latex](ss_0, as_0)[/latex] that is [latex]n[/latex]-steps back.

The final part of this branch removes the first element from the list of states, actions, and rewards, and moves on to the next state.

What is the effect of all of the computation? This algorithm differs from standard SARSA as follows: it only updates a state-action pair after it has seen the next [latex]n[/latex] rewards that are returned, rather than just the single next reward. This means that there are no updates until the [latex]n^{th}[/latex] steps of the episode; and no TD-estimate of the future reward in the last [latex]n[/latex] steps of the episode.

Computationally, this is not much worse than 1-step learning. We need to store the last [latex]n[/latex] states, but the per-step computation is small and uniform for n-step, just as for 1-step.

Example – [latex]n[/latex]-step SARSA update

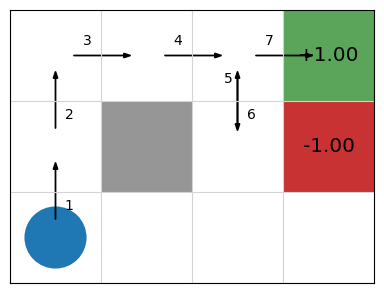

Consider our simple 2D navigation task, in which we do not know the probability transitions nor the rewards. Initially, the reinforcement learning algorithm will be required to search randomly until it finds a reward. Propagating this reward back n-steps will be helpful.

Imagine the first episode consisting of the following (very lucky!) episode:

Assuming [latex]Q(s,a)=0[/latex] for all [latex]s[/latex] and [latex]a[/latex], if we traverse the episode the labelled episode, what will our Q-function look like for a 5-step update with [latex]\alpha=0.5[/latex] and [latex]\gamma=0.9[/latex]?

For the first [latex]n-1[/latex] steps of the episode, no update is made to the Q-values, but rewards and states are stored for future processing.

On step 5, we reach the end of our n-step window, and we start to update values because [latex]|rs|=n[/latex]. We calculate [latex]G[/latex] from [latex]rs[/latex] and update [latex]Q(ss_0, as_0)[/latex], and then similarly for the 6[latex]^{th}[/latex] step:

[latex]\begin{array}{llll} & \text{Step 5} & rs = \langle 0, 0, 0, 0, 0\rangle\\ & & G = \gamma^0 rs_0 + \ldots + \gamma^4 rs_4 + \gamma^5 Q((2,1), Up) = 0\\ & & Q((0,0), Up) = 0\\[1mm] & \text{Step 6} & rs = \langle 0, 0, 0, 0, 0\rangle\\ & & G = \gamma^0 rs_0 + \ldots + \gamma^4 rs_4 + \gamma^5 Q((2,2), Right) = 0\\ & & Q((0,1), Up) = 0 + 0.5[0 - 0] = 0 \end{array}[/latex]

We can see that there are no rewards in the first five steps, so the Q-values first two state-action pairs in the sequence [latex]Q((0,0), Up)[/latex] and [latex]Q((0,1), Up)[/latex] both remain 0, their original values.

When we reach step 7, however, we receive a reward. We can then update the Q-value [latex]Q((0,2), Right)[/latex] of the state-action pair at step 3:

[latex]\begin{array}{llll} & \text{Step 7} & rs = \langle 0, 0, 0, 0, 1\rangle\\ & & G = \gamma^0 rs_0 + \ldots + \gamma^4 rs_4 + \gamma^5 Q((3,2), Terminate) = 0.9^4 \cdot 1 = 0.6561\\ & & Q((0,2), Right) = 0 + 0.5[0.6561 - 0] = 0.32805 \end{array}[/latex]

From this point, [latex]s[/latex] is a terminal state,, so we no longer select and execute actions, nor store the rewards, actions, and states. However, we continue to update the steps in the episode, leaving off [latex]Q(ss_5, as_5)[/latex] because there is no future discount reward beyond the end of the episode:

[latex]\begin{array}{llll} & \text{Step 8} & rs = \langle 0, 0, 0, 1\rangle\\ & & G = \gamma^0 rs_0 + \ldots + \gamma^4 rs_3 = 0.9^3 \cdot 1 = 0.729\\ & & Q((1,2), Right) = 0 + 0.5[0.729 - 0] = 0.3645\\[1mm] & \text{Step 9} & rs = \langle 0, 0, 1\rangle\\ & & G = \gamma^0 rs_0 + \ldots + \gamma^3 rs_2 = 0.9^2 \cdot 1 = 0.81\\ & & Q((2,2), Down) = 0 + 0.5[0.81 - 0] = 0.405\\[1mm] & \text{Step 10} & rs = \langle 0, 1\rangle\\ & & G = \gamma^0 rs_0 + \ldots + \gamma^2 rs_1 = 0.9^1 \cdot 1 = 0.9 = 0.9\\ & & Q((2,1), Up) = 0 + 0.5[0.9 - 0] = 0.45\\[1mm] & \text{Step 11} & rs = \langle 1\rangle\\ & & G = \gamma^0 rs_0 = 0.9^0 \cdot 1 = 1\\ & & Q((2,2, Right) = 0 + 0.5[1 \cdot 1 - 0] = 0.5 \end{array}[/latex]

At this point, there are no further states left to update, so the inner loop terminates, and we start a new episode.

Implementation

Below is a Python implementation of n-step temporal difference learning. We first implement an abstract superclass NStepReinforcementLearner, which contains most of the code we need, except the part that determines the value of state [latex]s'[/latex] in the Q-function update, which is left to the subclass so we can support both n-step Q-learning and n-step SARSA:

class NStepReinforcementLearner:

def __init__(self, mdp, bandit, qfunction, n):

self.mdp = mdp

self.bandit = bandit

self.qfunction = qfunction

self.n = n

def execute(self, episodes=100):

for _ in range(episodes):

state = self.mdp.get_initial_state()

actions = self.mdp.get_actions(state)

action = self.bandit.select(state, actions, self.qfunction)

rewards = []

states = [state]

actions = [action]

while len(states) > 0:

if not self.mdp.is_terminal(state):

(next_state, reward, done) = self.mdp.execute(state, action)

rewards += [reward]

next_actions = self.mdp.get_actions(next_state)

if not self.mdp.is_terminal(next_state):

next_action = self.bandit.select(

next_state, next_actions, self.qfunction

)

states += [next_state]

actions += [next_action]

if len(rewards) == self.n or self.mdp.is_terminal(state):

n_step_rewards = sum(

[

self.mdp.discount_factor ** i * rewards[i]

for i in range(len(rewards))

]

)

if not self.mdp.is_terminal(state):

next_state_value = self.state_value(next_state, next_action)

n_step_rewards = (

n_step_rewards

+ self.mdp.discount_factor ** self.n * next_state_value

)

q_value = self.qfunction.get_q_value(

states[0], actions[0]

)

self.qfunction.update(

states[0],

actions[0],

n_step_rewards - q_value,

)

rewards = rewards[1 : self.n + 1]

states = states[1 : self.n + 1]

actions = actions[1 : self.n + 1]

state = next_state

action = next_action

""" Get the value of a state """

def state_value(self, state, action):

abstract

We inherit from this class to implement the n-step Q-learning algorithm:

from n_step_reinforcement_learner import NStepReinforcementLearner

class NStepQLearning(NStepReinforcementLearner):

def state_value(self, state, action):

max_q_value = self.qfunction.get_max_q(state, self.mdp.get_actions(state))

return max_q_value

Example – 1-step Q-learning vs 5-step Q-learning

Using the interactive graphic below, we compare 1-step vs. 5-step Q-learning over the first 20 episodes of learning in the GridWorld task. Using 1-step Q-learning, reaching the reward only informs the state from which it is reached in the first episode; whereas for 5-step Q-learning, it informs the previous five steps. Then, in the 2nd episode, if any action reaches a state that has been visited, it can access the TD-estimate for that state. There are five such states in 5-step Q-learning; and just one in 1-step Q-learning. On all subsequent iterations, there is more chance of encountering a state with a TD estimate and those estimates are better informed. The end result is that the estimates ‘spread’ throughout the Q-table more quickly:

Values of n

Can we just increase [latex]n[/latex] to be infinity so that we get the reward for the entire episode? Doing this is the same as Monte-Carlo reinforcement learning, as we would no longer use TD estimates in the update rule. As we have seen, this leads to more variance in the learning.

What is the best value for [latex]n[/latex] then? Unfortunately, there is no theoretically best value for [latex]n[/latex]. It depends on the particular application and reward function that is being trained. In practice, it seems that values of [latex]n[/latex] around 4-8 give good updates because we can easily assign credit to each of the 4-8 actions; that is, we can tell whether the 4-8 actions in the lookahead contributed to the score, because we use the TD estimates.

Takeaways

- n-step reinforcement learning propagates rewards back [latex]n[/latex] steps to help with learning.

- It is conceptually quite simple, but the implementation requires a lot of ‘book-keeping’.

- Choosing a value of [latex]n[/latex] for a domain requires experimentation and intuition.