11 Discrete Distributions

[latex]\newcommand{\pr}[1]{P(#1)} \newcommand{\var}[1]{\mbox{var}(#1)} \newcommand{\mean}[1]{\mbox{E}(#1)} \newcommand{\sd}[1]{\mbox{sd}(#1)} \newcommand{\Binomial}[3]{#1 \sim \mbox{Binomial}(#2,#3)} \newcommand{\Student}[2]{#1 \sim \mbox{Student}(#2)} \newcommand{\Normal}[3]{#1 \sim \mbox{Normal}(#2,#3)} \newcommand{\Poisson}[2]{#1 \sim \mbox{Poisson}(#2)}[/latex]

Binomial Distribution

Counting occurrences of some random event is a common way of arriving at a discrete random variable. One of the simplest counting distributions is the Binomial distribution. As an example, let [latex]X[/latex] be the number of females in a sample of 3 individuals drawn independently from a population with a 1:1 sex ratio. There are 8 possible outcomes for the sexes of the 3 individuals:

FFF, FFM, FMF, FMM, MFF, MFM, MMF, MMM.

Since the probability of a female or male is 0.5, these 8 possibilities are all equally likely, with

\[ \pr{\mbox{FFF}} = 0.5 \times 0.5 \times 0.5 = 0.125, \]

and so on. If we count the number of females then we find that [latex]X[/latex] has only 4 values, not all equally likely. We can define the probability function on [latex]X[/latex] by [latex]\pr{X = 0} = 0.125[/latex], [latex]\pr{X=1} = 0.375[/latex], [latex]\pr{X=2} = 0.375[/latex], and [latex]\pr{X=3} = 0.125[/latex].

Because the random variable is discrete, we can list the values like this, or give them in a table, as in the table below.

Probability distribution of female count in a sample of 3

| [asciimath]x[/asciimath] | [asciimath]0[/asciimath] | [asciimath]1[/asciimath] | [asciimath]2[/asciimath] | [asciimath]3[/asciimath] |

| [asciimath]P(X=x)[/asciimath] | [asciimath]0.125[/asciimath] | [asciimath]0.375[/asciimath] | [asciimath]0.375[/asciimath] | [asciimath]0.125[/asciimath] |

The probability function is plotted in the following figure.

A Bernoulli trial is a random process which has two possible outcomes, usually labelled “success” and “failure”. The count of successes in a series of independent Bernoulli trials, with a constant probability of success, has what is called the Binomial distribution. If [latex]X[/latex] is the count from [latex]n[/latex] trials with success probability [latex]p[/latex] then we write [latex]\Binomial{X}{n}{p}[/latex].

The probability distribution in the previous table is Binomial(3,0.5) distribution. Let us suppose that the female probability was [latex]p[/latex] and see where the probabilities for Binomial(3,[latex]p[/latex]) come from. Assuming the trials are independent, the probability of getting three females, FFF, is

\[ \pr{X = 3} = p \times p \times p = p^3. \]

The probability of FFM, 2 females, is [latex]pp(1-p) = p^2(1-p)[/latex]. But we can also get 2 females through FMF or MFF, each also with probability [latex]p^2(1-p)[/latex], so

\[ \pr{X = 2} = 3p^2(1-p). \]

Similarly, there are 3 combinations which give 1 female, each with probability [latex]p(1-p)^2[/latex], so

\[ \pr{X = 1} = 3p(1-p)^2. \]

Finally,

\[ \pr{X = 0} = (1-p)^3. \]

This distribution is summarised in the following table. You can check that when [latex]p = 0.5[/latex] we get the values shown in our earlier table.

Probability function for the number of females in a sample of size 3

| [asciimath]x[/asciimath] | 0 | 1 | 2 | 3 |

| [asciimath]P(X=x)[/asciimath] | [asciimath](1-p)^3[/asciimath] | [asciimath]3p(1-p)^2[/asciimath] | [asciimath]3p^2(1-p)[/asciimath] | [asciimath]p^3[/asciimath] |

The general formula for [latex]\pr{X = x}[/latex] when [latex]\Binomial{X}{n}{p}[/latex] is

\[ \pr{X = x} = {n \choose x} p^x(1-p)^{n-x}, \]

where

\[ {n \choose x} = \;^nC_x = \frac{n(n-1)\cdots(n-x+1)}{x(x-1) \cdots 1} = \frac{n!}{x!(n-x)!} \]

is the Binomial coefficient, available on many calculators. The Binomial coefficient counts the number of ways in which [latex]x[/latex] objects can be chosen from [latex]n[/latex] possibilities when order doesn’t matter. For example, suppose we take a sample of 2 people from a group of 10. There are [latex]{10 \choose 2} = 45[/latex] different pairs of people we could choose.

The binomial distribution table gives Binomial probabilities for some simple values of [latex]p[/latex] and small values of [latex]n[/latex] for use in our examples. The cumulative binomial distribution table gives cumulative Binomial probabilities, which will also be useful (although they can be calculated by adding up the corresponding values in the original table. In practice software will calculate both of these for us.

Note that if [latex]p = 0.5[/latex] then the Binomial distribution is symmetric (see the previous figure and this figure in Chapter 17). If [latex]p \lt 0.5[/latex] then the distribution is skewed to the right (see this other figure in Chapter 17) while if [latex]p \gt 0.5[/latex] then it is skewed to the left.

Finite Populations

An obvious use of Binomial distributions is in modelling survey data. Suppose 50% of all university students are male and that we take a random sample of 100 students. The probability of an individual student being male is 0.5 and so our survey seems to be a series of Bernoulli trials and so the count of “yes” would be Binomial.

However, if the first person we survey is male then the probability in the remainder of the population will be slightly less than 0.5. Thus the trials will not be strictly independent. This effect will be small, especially if the population is much larger than the sample size. We will thus use the Binomial distribution to model such surveys.

If the population is finite and the size of the population is known then you can also use the hypergeometric distribution (in a later section) to calculate exact sample probabilities.

Sample Counts and Proportions

The rules in Chapter 10 can be used to derive formulas for the expected value and standard deviation of more complicated random variables, such as one with a Binomial distribution. This section shows the algebra needed for obtaining the formulas. You should read through this and try to follow the steps. There is no need to learn the proofs but it is useful to see that all of the formulas which we will encounter later in the book are not at all magical. They can all be shown using such fairly simple (though sometimes messy) algebra.

Sample Count

So let [latex]\Binomial{X}{n}{p}[/latex], the count of successes from [latex]n[/latex] independent Bernoulli trials each with success probability [latex]p[/latex]. To find the expected value and standard deviation of [latex]X[/latex], define new random variables [latex]B_1, B_2, \ldots, B_n[/latex] by

\[ B_j = \left\{ \begin{array}{ll}

1, & \mbox{ if }j\mbox{ th trial is success} \\

0, & \mbox{ if }j\mbox{ th trial is failure}. \\

\end{array} \right.

\]

Since each [latex]B_j[/latex] has only two possible outcomes it is easy to give its probability function, shown in the table below.

Probability function for [asciimath]B_j[/asciimath]

| [asciimath]b[/asciimath] | 0 | 1 |

| [asciimath]P(B_j=b)[/asciimath] | [asciimath]1-p[/asciimath] | [asciimath]p[/asciimath] |

We can work out the expected value and standard deviation directly from the definitions. Firstly,

\[ \mean{B_j} = p \times 1 + (1-p) \times 0 = p. \]

For example, if we pick a person at random from a population with 60% females then this says on average we will get 0.6 of a female.

Note that [latex]p[/latex] has two roles here and this is what sometimes makes working with sample counts and proportions a bit confusing. In the previous table, [latex]p[/latex] was a probability, the chance of a success. In [latex]\mean{B_j} = p[/latex] it now has units because it tells us what to expect for [latex]B_j[/latex] and [latex]B_j[/latex] is, for example, the number of females from a single Bernoulli trial. We have a 0.6 chance of getting a female which results in an average of 0.6 females per trial. Always the same number but a different interpretation.

The variance of each [latex]B_j[/latex] is

\begin{eqnarray*}

\var{B_j} & = & p \times (1-p)^2 + (1-p) \times (0 – p)^2 \\

& = & p(1 – 2p + p^2) + (1-p)p^2 \\

& = & p – 2p^2 + p^3 + p^2 – p^3 \\

& = & p – p^2 \\

& = & p(1 – p)

\end{eqnarray*}

so

\[ \sd{B_j} = \sqrt{p(1 – p)}. \]

Again this is in units of whatever we are counting.

Now what is [latex]B_1 + B_2 + \cdots + B_n[/latex]? Each time there is a success the corresponding [latex]B_j[/latex] is 1. So, for example, if we get 7 successes out of 10 trials then we will be adding up 7 lots of 1. This, of course, is 7. This sum will always give the count of successes. That is,

\[ X = B_1 + B_2 + \cdots + B_n. \]

Since we know the expected value and standard deviation of the [latex]B_j[/latex], we can use this to find the expected value and standard deviation of [latex]X[/latex]. Firstly,

\begin{eqnarray*}

\mean{X} & = & \mean{B_1 + B_2 + \cdots + B_n} \\

& = & \mean{B_1} + \mean{B_2} + \cdots + \mean{B_n} \\

& = & p + p + \cdots + p \\

& = & np.

\end{eqnarray*}

For standard deviation, since the [latex]B_j[/latex] are independent we have

\begin{eqnarray*}

\var{X} & = & \var{B_1 + B_2 + \cdots + B_n} \\

& = & \var{B_1} + \var{B_2} + \cdots + \var{B_n} \\

& = & p(1-p) + p(1-p) + \cdots + p(1-p) \\

& = & np(1-p).

\end{eqnarray*}

so [latex]\sd{X} = \sqrt{np(1-p)}[/latex].

For example, if 60% of a population are female and we pick a random sample of 10 individuals then we would expect to get [latex]10 \times 0.6 = 6[/latex] females in our sample. The standard deviation of the sample count is

\[ \sd{X} = \sqrt{10 \times 0.6 \times 0.4} = 1.55. \]

Note that these numbers have units — we expect to find 6 females in the sample with a standard deviation of 1.55 females.

Sample Proportion

The sample proportion is

\[ \hat{P} = \frac{X}{n} \]

so we can use the formulas from Chapter 10 to find

\[ \mean{\hat{P}} = \mbox{E}\left(\frac{X}{n}\right) = \frac{1}{n} \mean{X} = \frac{1}{n} (np) = p. \]

This is not surprising. If you take a sample of any size from a population with 60% females then you would expect your sample to have 60% females. We thus call [latex]\hat{P}[/latex] an unbiased estimator of [latex]p[/latex]. The standard deviation is

\[ \sd{\hat{P}} = \mbox{sd}\left(\frac{X}{n}\right) = \frac{1}{n} \sqrt{np(1-p)} = \sqrt{\frac{p(1-p)}{n}}. \]

Note that [latex]\sd{\hat{P}}[/latex] is proportional to [latex]1/\sqrt{n}[/latex], just as for [latex]\sd{\overline{X}}[/latex].

Maximum Likelihood Estimation

The sample proportion seems a sensible estimate of the population proportion since we’ve seen that [latex]\mean{\hat{P}} = p[/latex], that on average the sample proportion gives the population proportion. This is the main justification for estimators that we will use in this book. However, there is another important method that you should be familiar with, known as maximum likelihood estimation (MLE).

To see how this works we start by asking a slightly different question to before. For example, suppose we pick a sample of [latex]n= 20[/latex] people and find that [latex]X = 16[/latex] drink coffee. How can we estimate the proportion, [latex]p[/latex], of all the people that our sample came from that drink coffee?

The maximum likelihood approach is to consider all possible values for [latex]p[/latex] and work out the probability of actually getting [latex]X = 16[/latex] people who drink coffee in our sample. From the Binomial formula this is

\[ {20 \choose 16} p^{16}(1-p)^{20-16} = 4845p^{16}(1-p)^4. \]

For example, if [latex]p = 0.6[/latex] then [latex]\pr{X=16} = 0.035[/latex], while if [latex]p = 0.75[/latex] then [latex]\pr{X=16} = 0.130[/latex]. Thus it is more likely to get 16 coffee drinkers if [latex]p = 0.75[/latex] than if [latex]p = 0.6[/latex]. The obvious question then is which value of [latex]p[/latex] makes it most likely to get 16 coffee drinkers in our sample?

You can solve this question using calculus but here we will just look at the figure below. This is a plot of the likelihood function, [latex]\pr{X=16 \; | \; p}[/latex], the probability of obtaining our data for different values of the unknown population proportion [latex]p[/latex]. We see the value that maximises the likelihood function is [latex]p = 0.8[/latex], so 0.8 is our maximum likelihood estimate of the proportion of coffee drinkers in our population.

This isn’t a terribly surprising example since the ML estimate is just [latex]\hat{p}[/latex], the sample proportion! However, MLE can be used in more complicated settings where there is no obvious estimate for the population parameter of interest.

Hypergeometric Distribution

We have noted that the Binomial distribution is not strictly correct as a model for sampling from a finite population. To be precise, once we have made our measurement of success or failure on the first individual, the distribution of the remainder will change slightly, resulting in observations that are not independent. If the population is sufficiently large, at least 10 times the sample size, then this effect will be negligible and so we have worked with this approximation quite happily.

However, it is possible to calculate exact probabilities for counts from finite populations. Suppose [latex]X[/latex] is the count of successes in a sample of size [latex]n[/latex] from a population of size [latex]N[/latex]. For the Binomial distribution we also needed the success probability [latex]p[/latex] to make calculations. However, here we can describe the whole distribution by saying that [latex]N = N_1 + N_2[/latex], where [latex]N_1[/latex] is the number of “successes” and [latex]N_2[/latex] is the number of failures. For the first sample we would have [latex]p = \frac{N_1}{N}[/latex] but this would change immediately after the first sample.

As a specific example, suppose we take a sample of [latex]n[/latex] = 10 people from a group with [latex]N_1[/latex] = 20 females and [latex]N_2[/latex] = 30 males. What is the probability of getting exactly [latex]X = 6[/latex] females in our sample?

Firstly, the number of different samples we could possibly get is the number of ways of choosing 10 things from a collection of [latex]20 + 30 = 50[/latex] things. This is [latex]{50 \choose 10} = 10272278170[/latex]. Each of these is equally likely and we want to know how many have 6 females.

Now there are 6 spots in our sample for females and there are 20 females to choose from. Thus the number of ways we can fill these 6 spots is [latex]{20 \choose 6} = 38760[/latex]. For each of these possibilities we need to see how many ways we can fill the remaining 4 spots with males. This is similarly [latex]{30 \choose 4} = 27405[/latex]. Together the total number of samples that would give 6 females is

\[ 38760 \times 27405 = 1062217800. \]

Thus the probability of 6 females in the sample of 10 is

\[ \pr{X = 6} = \frac{1062217800}{10272278170} = 0.1034. \]

A Binomial calculation with [latex]p = \frac{20}{50} = 0.4[/latex] fixed gives [latex]\pr{X = 6} = 0.1115[/latex], showing the error involved in the Binomial assumption of independence.

Following these steps in general gives the probability of getting [latex]x[/latex] successes out of [latex]n[/latex] trials as

\[ \pr{X = x} = \frac{{N_1 \choose x} {N_2 \choose n-x}}{{N \choose n}}. \]

If [latex]X[/latex] has these probabilities then we say that it follows a hypergeometric distribution.

Poisson Distribution

William Gosset, a chemist at the Guiness Brewery in Dublin, described an experiment in which he spread a thin layer of mixture containing yeast cells, gelatine and water on a plate of glass. A small area was divided into 400 squares of equal size and a hemocytometer was used to count the number of yeast cells in each square. Guinness did not allow employees to publish at the time, so his work was published using the pseudonym Student (1907). The experimental results are reproduced here in the Appendix.

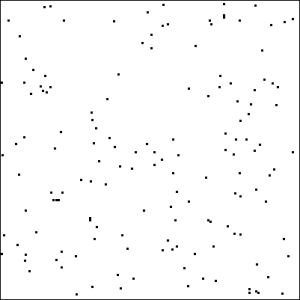

The distribution of the 400 counts is shown in the figure above. How should we model this data? It looks like a Binomial distribution because it is a count of outcomes. But what are the values of [latex]n[/latex] and [latex]p[/latex]? We can imagine a very fine grid with [latex]n[/latex] holes in it, each the size of a yeast cell, and each hole has a small probability [latex]p[/latex] of having a yeast cell in it. Yet [latex]n[/latex] is rather indefinite, some very large number that we cannot really quantify, and [latex]p[/latex] is correspondingly tiny.

However, we can still talk about the average yeast cell count, [latex]np[/latex]. From the data in the Appendix we find a sample mean of [latex]\overline{x} = 4.68[/latex] yeast cells per unit of area. We will model this type of data by working directly with the mean.

Gosset derived a “law” related to the Binomial distribution for this case of events where [latex]p[/latex] is very small and [latex]n[/latex] is very large. Since [latex]p[/latex] is small it is often referred to as the “law of rare events”. It gives the probability of a count [latex]x[/latex] by

\[ \pr{X = x} = \frac{e^{-\lambda} \lambda^x}{x!}, \]

where [latex]\lambda[/latex] is the mean of the counts. The table below shows a comparison between the observed values and the expected values from this distribution when [latex]\lambda = 4.68[/latex]. There seems to be a reasonable match, which we will return to in Chapter 22.

Observed and expected counts of yeast cells

| Yeast Cells | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9+ |

| Observed | 0 | 20 | 43 | 53 | 86 | 70 | 54 | 37 | 18 | 19 |

| Expected | 3.7 | 17.4 | 40.6 | 63.4 | 74.2 | 69.4 | 54.2 | 36.2 | 21.2 | 19.7 |

It turns out that Gosset had rediscovered a distribution that had earlier been described by the French mathematician Siméon Poisson in his 1837 book Recherchés sur la probabilité des jugements en matiére criminelle et matiére civile. This was a significant work but was not widely known in that century. In 1898 Ladislaus von Bortkiewicz published Das Gesetz der kleinen Zahlen (The Law of Small Numbers) which gave examples of a range of cheery settings in which the Poisson distribution arose, such as numbers of suicides and the numbers of deaths from horse kicks in the Prussian Army. Bulmer (1979) and Feller (1970) give further examples and applications.

As it was Bortkiewicz who first showed the wide applicability of the distribution, some authors have suggested it be called the “Bortkiewicz distribution”. However, if a random variable [latex]X[/latex] has the probability function given above then we say it has a Poisson distribution and write [latex]\Poisson{X}{\lambda}[/latex].

Aggregation and Over-dispersion

Since [latex]X[/latex] is discrete we can use the formulas from Chapter 10 to calculate the expected value and standard deviation of [latex]X[/latex]. However, unlike the Binomial distribution, here there is no upper limit for [latex]X[/latex] from a Poisson distribution. Even though it becomes very unlikely that large values could occur, [latex]\pr{X = x}[/latex] never equals 0. This just means that our sums are infinite sums. Such sums don’t always converge to a number but here they do and we find

\[ \mean{X} = \sum_{x=0}^{\infty} \frac{e^{-\lambda} \lambda^x}{x!} x = \lambda, \]

and then

\[ \var{X} = \sum_{x=0}^{\infty} \frac{e^{-\lambda} \lambda^x}{x!} (x – \lambda)^2 = \lambda. \]

We have implicitly used [latex]\mean{X} = \lambda[/latex] in the above examples. But the variance is also [latex]\lambda[/latex], and so looking at the ratio

\[ \mbox{CD } = \frac{s^2}{\overline{x}}, \]

the coefficient of dispersion, gives a simple way of seeing whether sample data comes from a Poisson distribution. This value, alternatively known as the index of dispersion or the variance-to-mean ratio, should be around 1 for Poisson data.

Often in biological studies there will be a larger number of extreme values than the Poisson distribution predicts. For example, if we were counting the spatial distribution of some vegetation then we would often find cells where a lot of vegetation is clumped, giving high counts. This aggregation gives a higher standard deviation, so we would find [latex]s^2 \gt \overline{x}[/latex], compared to the expected [latex]s^2 = \overline{x}[/latex] for a Poisson distribution. This would give a CD value greater than 1.

It is also possible to have a distribution where [latex]\sigma^2 \lt \mu[/latex]. Berkson et al. (1935) carried out a similar hemocytometer study to the one presented by Gosset but counting red blood cells instead of yeast cells. Over a number of experiments they found the general relation [latex]\sigma^2 = 0.85\mu[/latex]. This result is thought attributable to the forces acting between the red blood cells which encourages them to form a more uniform distribution across a surface, the opposite of the aggregation found in other biological settings. With a more uniform distribution, known as over-dispersion, the variability in the counts will be lower, leading to a lower [latex]s^2[/latex]. The result will be a value of CD less than 1.

Dispersion?

We have used terminology from ecology here where we take ‘dispersed’ to mean spread out in space. However, the term ‘dispersion’ is also used as a synonym for ‘variability’ in many other areas of statistics. In that case ‘over-dispersed’ would mean the variability is higher than it should be, corresponding to what we have called ‘aggregated’ above. Conversely, ‘under-dispersed’ would mean that the variability is less than it should be, precisely what we have called ‘over-dispersed’! This is something to be aware of but generally it will be clear from the context and the values of the statistics which terminology is being used.

Summary

- The Binomial distribution is an important example of a discrete random variable.

- [latex]\Binomial{X}{n}{p}[/latex] indicates that the random variable [latex]X[/latex] has a Binomial distribution with [latex]n[/latex] Bernoulli trials, each with success probability [latex]p[/latex].

- Binomial distributions are used to model sampling from finite populations.

- Maximum likelihood estimation can also be used to obtain the best estimate of a population proportion based on data.

- The Poisson distribution is a model for random counts of rare events.

- [latex]\Poisson{X}{\lambda}[/latex] indicates that [latex]X[/latex] is a random variable having a Poisson distribution with mean [latex]\lambda[/latex].

- The coefficient of dispersion gives a rough measure for determining whether data could come from a Poisson distribution.

Exercise 1

Suppose 30% of students have tried marijuana. What is the probability that at least half in a sample of 10 students have tried marijuana? Does the answer change for a sample of 20 students?

Exercise 2

Use the Binomial formula to verify some of the values in the binomial distribution table.

Exercise 3

Find a discrepancy between the binomial distribution and cumulative binomial distribution tables and explain why it might arise.

Exercise 4

Use the formulas from Chapter 10 to calculate [latex]\mean{X}[/latex] and [latex]\sd{X}[/latex] for [latex]X[/latex] given in an earlier table. Use the Binomial formulas from earlier in this chapter to check your calculations.

Exercise 5

Use the hypergeometric formula to verify that the probability of winning simple Keno is [latex]\frac{1}{4}[/latex], as we assumed in Chapter 8.

Exercise 6

Suppose [latex]X[/latex] has a Binomial distribution with [latex]\mean{X} = 8[/latex] and [latex]\var{X} = 6[/latex]. What number of trials, [latex]n[/latex], does [latex]X[/latex] involve?

Exercise 7

If you know a bit of calculus, show that the maximum likelihood estimate for [latex]p[/latex] really is 0.8 for the example in the MLE section. (Hint: The maximum occurs when the slope of the likelihood function is 0, so take the derivative of the function with respect to [latex]p[/latex] and solve to find when this is 0.)

Exercise 8

The “jackpot” bet in Keno is often to pick 10 numbers out of the 80 and have all 10 come up in the 20 chosen. Calculate the probability of this occurring using the hypergeometric formula.

The figure below gives an example of spatial data, similar to the yeast cell data. As Gosset did, divide the area into a grid, such as an 8-by-8 grid of 64 squares. Count the number of individuals in each square and make an appropriate plot of the count data. Calculate the mean count and use it to estimate [latex]\lambda[/latex] in a Poisson distribution. Using the Poisson formula, calculate the estimated proportions of each count and compare these to your observations. Does a Poisson model of this data seem appropriate?

Exercise 10

Suppose [latex]X[/latex] has a Poisson([latex]\lambda[/latex]) distribution. What value of [latex]\lambda[/latex] gives the probability [latex]\pr{X=5}[/latex] closest to 0.055?

Binomial distribution

| [asciimath]n[/asciimath] | [asciimath]x[/asciimath] | [asciimath]p=[/asciimath]0.01 | 0.05 | 0.10 | 0.20 | 0.25 | 0.30 | 0.40 | 0.50 |

|---|---|---|---|---|---|---|---|---|---|

| 1 | 0 | 0.990 | 0.950 | 0.900 | 0.800 | 0.750 | 0.700 | 0.600 | 0.500 |

| 1 | 0.010 | 0.050 | 0.100 | 0.200 | 0.250 | 0.300 | 0.400 | 0.500 | |

| 2 | 0 | 0.980 | 0.902 | 0.810 | 0.640 | 0.562 | 0.490 | 0.360 | 0.250 |

| 1 | 0.020 | 0.095 | 0.180 | 0.320 | 0.375 | 0.420 | 0.480 | 0.500 | |

| 2 | 0.002 | 0.010 | 0.040 | 0.062 | 0.090 | 0.160 | 0.250 | ||

| 3 | 0 | 0.970 | 0.857 | 0.729 | 0.512 | 0.422 | 0.343 | 0.216 | 0.125 |

| 1 | 0.029 | 0.135 | 0.243 | 0.384 | 0.422 | 0.441 | 0.432 | 0.375 | |

| 2 | 0.007 | 0.027 | 0.096 | 0.141 | 0.189 | 0.288 | 0.375 | ||

| 3 | 0.001 | 0.008 | 0.016 | 0.027 | 0.064 | 0.125 | |||

| 4 | 0 | 0.961 | 0.815 | 0.656 | 0.410 | 0.316 | 0.240 | 0.130 | 0.062 |

| 1 | 0.039 | 0.171 | 0.292 | 0.410 | 0.422 | 0.412 | 0.346 | 0.250 | |

| 2 | 0.001 | 0.014 | 0.049 | 0.154 | 0.211 | 0.265 | 0.346 | 0.375 | |

| 3 | 0.004 | 0.026 | 0.047 | 0.076 | 0.154 | 0.250 | |||

| 4 | 0.002 | 0.004 | 0.008 | 0.026 | 0.062 | ||||

| 5 | 0 | 0.951 | 0.774 | 0.590 | 0.328 | 0.237 | 0.168 | 0.078 | 0.031 |

| 1 | 0.048 | 0.204 | 0.328 | 0.410 | 0.396 | 0.360 | 0.259 | 0.156 | |

| 2 | 0.001 | 0.021 | 0.073 | 0.205 | 0.264 | 0.309 | 0.346 | 0.312 | |

| 3 | 0.001 | 0.008 | 0.051 | 0.088 | 0.132 | 0.230 | 0.312 | ||

| 4 | 0.006 | 0.015 | 0.028 | 0.077 | 0.156 | ||||

| 5 | 0.001 | 0.002 | 0.010 | 0.031 | |||||

| 6 | 0 | 0.941 | 0.735 | 0.531 | 0.262 | 0.178 | 0.118 | 0.047 | 0.016 |

| 1 | 0.057 | 0.232 | 0.354 | 0.393 | 0.356 | 0.303 | 0.187 | 0.094 | |

| 2 | 0.001 | 0.031 | 0.098 | 0.246 | 0.297 | 0.324 | 0.311 | 0.234 | |

| 3 | 0.002 | 0.015 | 0.082 | 0.132 | 0.185 | 0.276 | 0.312 | ||

| 4 | 0.001 | 0.015 | 0.033 | 0.060 | 0.138 | 0.234 | |||

| 5 | 0.002 | 0.004 | 0.010 | 0.037 | 0.094 | ||||

| 6 | 0.001 | 0.004 | 0.016 | ||||||

| 7 | 0 | 0.932 | 0.698 | 0.478 | 0.210 | 0.133 | 0.082 | 0.028 | 0.008 |

| 1 | 0.066 | 0.257 | 0.372 | 0.367 | 0.311 | 0.247 | 0.131 | 0.055 | |

| 2 | 0.002 | 0.041 | 0.124 | 0.275 | 0.311 | 0.318 | 0.261 | 0.164 | |

| 3 | 0.004 | 0.023 | 0.115 | 0.173 | 0.227 | 0.290 | 0.273 | ||

| 4 | 0.003 | 0.029 | 0.058 | 0.097 | 0.194 | 0.273 | |||

| 5 | 0.004 | 0.012 | 0.025 | 0.077 | 0.164 | ||||

| 6 | 0.001 | 0.004 | 0.017 | 0.055 | |||||

| 7 | 0.002 | 0.008 | |||||||

| 8 | 0 | 0.923 | 0.663 | 0.430 | 0.168 | 0.100 | 0.058 | 0.017 | 0.004 |

| 1 | 0.075 | 0.279 | 0.383 | 0.336 | 0.267 | 0.198 | 0.090 | 0.031 | |

| 2 | 0.003 | 0.051 | 0.149 | 0.294 | 0.311 | 0.296 | 0.209 | 0.109 | |

| 3 | 0.005 | 0.033 | 0.147 | 0.208 | 0.254 | 0.279 | 0.219 | ||

| 4 | 0.005 | 0.046 | 0.087 | 0.136 | 0.232 | 0.273 | |||

| 5 | 0.009 | 0.023 | 0.047 | 0.124 | 0.219 | ||||

| 6 | 0.001 | 0.004 | 0.010 | 0.041 | 0.109 | ||||

| 7 | 0.001 | 0.008 | 0.031 | ||||||

| 8 | 0.001 | 0.004 | |||||||

| 9 | 0 | 0.914 | 0.630 | 0.387 | 0.134 | 0.075 | 0.040 | 0.010 | 0.002 |

| 1 | 0.083 | 0.299 | 0.387 | 0.302 | 0.225 | 0.156 | 0.060 | 0.018 | |

| 2 | 0.003 | 0.063 | 0.172 | 0.302 | 0.300 | 0.267 | 0.161 | 0.070 | |

| 3 | 0.008 | 0.045 | 0.176 | 0.234 | 0.267 | 0.251 | 0.164 | ||

| 4 | 0.001 | 0.007 | 0.066 | 0.117 | 0.172 | 0.251 | 0.246 | ||

| 5 | 0.001 | 0.017 | 0.039 | 0.074 | 0.167 | 0.246 | |||

| 6 | 0.003 | 0.009 | 0.021 | 0.074 | 0.164 | ||||

| 7 | 0.001 | 0.004 | 0.021 | 0.070 | |||||

| 8 | 0.004 | 0.018 | |||||||

| 9 | 0.002 | ||||||||

| 10 | 0 | 0.904 | 0.599 | 0.349 | 0.107 | 0.056 | 0.028 | 0.006 | 0.001 |

| 1 | 0.091 | 0.315 | 0.387 | 0.268 | 0.188 | 0.121 | 0.040 | 0.010 | |

| 2 | 0.004 | 0.075 | 0.194 | 0.302 | 0.282 | 0.233 | 0.121 | 0.044 | |

| 3 | 0.010 | 0.057 | 0.201 | 0.250 | 0.267 | 0.215 | 0.117 | ||

| 4 | 0.001 | 0.011 | 0.088 | 0.146 | 0.200 | 0.251 | 0.205 | ||

| 5 | 0.001 | 0.026 | 0.058 | 0.103 | 0.201 | 0.246 | |||

| 6 | 0.006 | 0.016 | 0.037 | 0.111 | 0.205 | ||||

| 7 | 0.001 | 0.003 | 0.009 | 0.042 | 0.117 | ||||

| 8 | 0.001 | 0.011 | 0.044 | ||||||

| 9 | 0.002 | 0.010 | |||||||

| 10 | 0.001 | ||||||||

| 11 | 0 | 0.895 | 0.569 | 0.314 | 0.086 | 0.042 | 0.020 | 0.004 | |

| 1 | 0.099 | 0.329 | 0.384 | 0.236 | 0.155 | 0.093 | 0.027 | 0.005 | |

| 2 | 0.005 | 0.087 | 0.213 | 0.295 | 0.258 | 0.200 | 0.089 | 0.027 | |

| 3 | 0.014 | 0.071 | 0.221 | 0.258 | 0.257 | 0.177 | 0.081 | ||

| 4 | 0.001 | 0.016 | 0.111 | 0.172 | 0.220 | 0.236 | 0.161 | ||

| 5 | 0.002 | 0.039 | 0.080 | 0.132 | 0.221 | 0.226 | |||

| 6 | 0.010 | 0.027 | 0.057 | 0.147 | 0.226 | ||||

| 7 | 0.002 | 0.006 | 0.017 | 0.070 | 0.161 | ||||

| 8 | 0.001 | 0.004 | 0.023 | 0.081 | |||||

| 9 | 0.001 | 0.005 | 0.027 | ||||||

| 10 | 0.001 | 0.005 | |||||||

| 12 | 0 | 0.886 | 0.540 | 0.282 | 0.069 | 0.032 | 0.014 | 0.002 | |

| 1 | 0.107 | 0.341 | 0.377 | 0.206 | 0.127 | 0.071 | 0.017 | 0.003 | |

| 2 | 0.006 | 0.099 | 0.230 | 0.283 | 0.232 | 0.168 | 0.064 | 0.016 | |

| 3 | 0.017 | 0.085 | 0.236 | 0.258 | 0.240 | 0.142 | 0.054 | ||

| 4 | 0.002 | 0.021 | 0.133 | 0.194 | 0.231 | 0.213 | 0.121 | ||

| 5 | 0.004 | 0.053 | 0.103 | 0.158 | 0.227 | 0.193 | |||

| 6 | 0.016 | 0.040 | 0.079 | 0.177 | 0.226 | ||||

| 7 | 0.003 | 0.011 | 0.029 | 0.101 | 0.193 | ||||

| 8 | 0.001 | 0.002 | 0.008 | 0.042 | 0.121 | ||||

| 9 | 0.001 | 0.012 | 0.054 | ||||||

| 10 | 0.002 | 0.016 | |||||||

| 11 | 0.003 | ||||||||

| 15 | 0 | 0.860 | 0.463 | 0.206 | 0.035 | 0.013 | 0.005 | ||

| 1 | 0.130 | 0.366 | 0.343 | 0.132 | 0.067 | 0.031 | 0.005 | ||

| 2 | 0.009 | 0.135 | 0.267 | 0.231 | 0.156 | 0.092 | 0.022 | 0.003 | |

| 3 | 0.031 | 0.129 | 0.250 | 0.225 | 0.170 | 0.063 | 0.014 | ||

| 4 | 0.005 | 0.043 | 0.188 | 0.225 | 0.219 | 0.127 | 0.042 | ||

| 5 | 0.001 | 0.010 | 0.103 | 0.165 | 0.206 | 0.186 | 0.092 | ||

| 6 | 0.002 | 0.043 | 0.092 | 0.147 | 0.207 | 0.153 | |||

| 7 | 0.014 | 0.039 | 0.081 | 0.177 | 0.196 | ||||

| 8 | 0.003 | 0.013 | 0.035 | 0.118 | 0.196 | ||||

| 9 | 0.001 | 0.003 | 0.012 | 0.061 | 0.153 | ||||

| 10 | 0.001 | 0.003 | 0.024 | 0.092 | |||||

| 11 | 0.001 | 0.007 | 0.042 | ||||||

| 12 | 0.002 | 0.014 | |||||||

| 13 | 0.003 | ||||||||

| 20 | 0 | 0.818 | 0.358 | 0.122 | 0.012 | 0.003 | 0.001 | ||

| 1 | 0.165 | 0.377 | 0.270 | 0.058 | 0.021 | 0.007 | |||

| 2 | 0.016 | 0.189 | 0.285 | 0.137 | 0.067 | 0.028 | 0.003 | ||

| 3 | 0.001 | 0.060 | 0.190 | 0.205 | 0.134 | 0.072 | 0.012 | 0.001 | |

| 4 | 0.013 | 0.090 | 0.218 | 0.190 | 0.130 | 0.035 | 0.005 | ||

| 5 | 0.002 | 0.032 | 0.175 | 0.202 | 0.179 | 0.075 | 0.015 | ||

| 6 | 0.009 | 0.109 | 0.169 | 0.192 | 0.124 | 0.037 | |||

| 7 | 0.002 | 0.055 | 0.112 | 0.164 | 0.166 | 0.074 | |||

| 8 | 0.022 | 0.061 | 0.114 | 0.180 | 0.120 | ||||

| 9 | 0.007 | 0.027 | 0.065 | 0.160 | 0.160 | ||||

| 10 | 0.002 | 0.010 | 0.031 | 0.117 | 0.176 | ||||

| 11 | 0.003 | 0.012 | 0.071 | 0.160 | |||||

| 12 | 0.001 | 0.004 | 0.035 | 0.120 | |||||

| 13 | 0.001 | 0.015 | 0.074 | ||||||

| 14 | 0.005 | 0.037 | |||||||

| 15 | 0.001 | 0.015 | |||||||

| 16 | 0.005 | ||||||||

| 17 | 0.001 |

This table gives [asciimath]P(X=x)[/asciimath] for [latex]X\sim\mbox{Binomial}(n,p)[/latex]. For [asciimath]p \ge 0.50[/asciimath] reverse your question by counting failures instead of successes.

Cumulative Binomial distribution

| [latex]n[/latex] | [latex]x[/latex] | 0.01 | 0.05 | 0.10 | 0.20 | 0.25 | 0.30 | 0.40 | 0.50 |

|---|---|---|---|---|---|---|---|---|---|

| 1 | 1 | 0.010 | 0.050 | 0.100 | 0.200 | 0.250 | 0.300 | 0.400 | 0.500 |

| 2 | 1 | 0.020 | 0.098 | 0.190 | 0.360 | 0.438 | 0.510 | 0.640 | 0.750 |

| 2 | 0.003 | 0.010 | 0.040 | 0.062 | 0.090 | 0.160 | 0.250 | ||

| 3 | 1 | 0.030 | 0.143 | 0.271 | 0.488 | 0.578 | 0.657 | 0.784 | 0.875 |

| 2 | 0.007 | 0.028 | 0.104 | 0.156 | 0.216 | 0.352 | 0.500 | ||

| 3 | 0.001 | 0.008 | 0.016 | 0.027 | 0.064 | 0.125 | |||

| 4 | 1 | 0.039 | 0.185 | 0.344 | 0.590 | 0.684 | 0.760 | 0.870 | 0.938 |

| 2 | 0.001 | 0.014 | 0.052 | 0.181 | 0.262 | 0.348 | 0.525 | 0.688 | |

| 3 | 0.004 | 0.027 | 0.051 | 0.084 | 0.179 | 0.312 | |||

| 4 | 0.002 | 0.004 | 0.008 | 0.026 | 0.062 | ||||

| 5 | 1 | 0.049 | 0.226 | 0.410 | 0.672 | 0.763 | 0.832 | 0.922 | 0.969 |

| 2 | 0.001 | 0.023 | 0.081 | 0.263 | 0.367 | 0.472 | 0.663 | 0.812 | |

| 3 | 0.001 | 0.009 | 0.058 | 0.104 | 0.163 | 0.317 | 0.500 | ||

| 4 | 0.007 | 0.016 | 0.031 | 0.087 | 0.188 | ||||

| 5 | 0.001 | 0.002 | 0.010 | 0.031 | |||||

| 6 | 1 | 0.059 | 0.265 | 0.469 | 0.738 | 0.822 | 0.882 | 0.953 | 0.984 |

| 2 | 0.001 | 0.033 | 0.114 | 0.345 | 0.466 | 0.580 | 0.767 | 0.891 | |

| 3 | 0.002 | 0.016 | 0.099 | 0.169 | 0.256 | 0.456 | 0.656 | ||

| 4 | 0.001 | 0.017 | 0.038 | 0.070 | 0.179 | 0.344 | |||

| 5 | 0.002 | 0.005 | 0.011 | 0.041 | 0.109 | ||||

| 6 | 0.001 | 0.004 | 0.016 | ||||||

| 7 | 1 | 0.068 | 0.302 | 0.522 | 0.790 | 0.867 | 0.918 | 0.972 | 0.992 |

| 2 | 0.002 | 0.044 | 0.150 | 0.423 | 0.555 | 0.671 | 0.841 | 0.938 | |

| 3 | 0.004 | 0.026 | 0.148 | 0.244 | 0.353 | 0.580 | 0.773 | ||

| 4 | 0.003 | 0.033 | 0.071 | 0.126 | 0.290 | 0.500 | |||

| 5 | 0.005 | 0.013 | 0.029 | 0.096 | 0.227 | ||||

| 6 | 0.001 | 0.004 | 0.019 | 0.062 | |||||

| 7 | 0.002 | 0.008 | |||||||

| 8 | 1 | 0.077 | 0.337 | 0.570 | 0.832 | 0.900 | 0.942 | 0.983 | 0.996 |

| 2 | 0.003 | 0.057 | 0.187 | 0.497 | 0.633 | 0.745 | 0.894 | 0.965 | |

| 3 | 0.006 | 0.038 | 0.203 | 0.321 | 0.448 | 0.685 | 0.855 | ||

| 4 | 0.005 | 0.056 | 0.114 | 0.194 | 0.406 | 0.637 | |||

| 5 | 0.010 | 0.027 | 0.058 | 0.174 | 0.363 | ||||

| 6 | 0.001 | 0.004 | 0.011 | 0.050 | 0.145 | ||||

| 7 | 0.001 | 0.009 | 0.035 | ||||||

| 8 | 0.001 | 0.004 | |||||||

| 9 | 1 | 0.086 | 0.370 | 0.613 | 0.866 | 0.925 | 0.960 | 0.990 | 0.998 |

| 2 | 0.003 | 0.071 | 0.225 | 0.564 | 0.700 | 0.804 | 0.929 | 0.980 | |

| 3 | 0.008 | 0.053 | 0.262 | 0.399 | 0.537 | 0.768 | 0.910 | ||

| 4 | 0.001 | 0.008 | 0.086 | 0.166 | 0.270 | 0.517 | 0.746 | ||

| 5 | 0.001 | 0.020 | 0.049 | 0.099 | 0.267 | 0.500 | |||

| 6 | 0.003 | 0.010 | 0.025 | 0.099 | 0.254 | ||||

| 7 | 0.001 | 0.004 | 0.025 | 0.090 | |||||

| 8 | 0.004 | 0.020 | |||||||

| 9 | 0.002 | ||||||||

| 10 | 1 | 0.096 | 0.401 | 0.651 | 0.893 | 0.944 | 0.972 | 0.994 | 0.999 |

| 2 | 0.004 | 0.086 | 0.264 | 0.624 | 0.756 | 0.851 | 0.954 | 0.989 | |

| 3 | 0.012 | 0.070 | 0.322 | 0.474 | 0.617 | 0.833 | 0.945 | ||

| 4 | 0.001 | 0.013 | 0.121 | 0.224 | 0.350 | 0.618 | 0.828 | ||

| 5 | 0.002 | 0.033 | 0.078 | 0.150 | 0.367 | 0.623 | |||

| 6 | 0.006 | 0.020 | 0.047 | 0.166 | 0.377 | ||||

| 7 | 0.001 | 0.004 | 0.011 | 0.055 | 0.172 | ||||

| 8 | 0.002 | 0.012 | 0.055 | ||||||

| 9 | 0.002 | 0.011 | |||||||

| 10 | 0.001 | ||||||||

| 11 | 1 | 0.105 | 0.431 | 0.686 | 0.914 | 0.958 | 0.980 | 0.996 | 1.000 |

| 2 | 0.005 | 0.102 | 0.303 | 0.678 | 0.803 | 0.887 | 0.970 | 0.994 | |

| 3 | 0.015 | 0.090 | 0.383 | 0.545 | 0.687 | 0.881 | 0.967 | ||

| 4 | 0.002 | 0.019 | 0.161 | 0.287 | 0.430 | 0.704 | 0.887 | ||

| 5 | 0.003 | 0.050 | 0.115 | 0.210 | 0.467 | 0.726 | |||

| 6 | 0.012 | 0.034 | 0.078 | 0.247 | 0.500 | ||||

| 7 | 0.002 | 0.008 | 0.022 | 0.099 | 0.274 | ||||

| 8 | 0.001 | 0.004 | 0.029 | 0.113 | |||||

| 9 | 0.001 | 0.006 | 0.033 | ||||||

| 10 | 0.001 | 0.006 | |||||||

| 12 | 1 | 0.114 | 0.460 | 0.718 | 0.931 | 0.968 | 0.986 | 0.998 | 1.000 |

| 2 | 0.006 | 0.118 | 0.341 | 0.725 | 0.842 | 0.915 | 0.980 | 0.997 | |

| 3 | 0.020 | 0.111 | 0.442 | 0.609 | 0.747 | 0.917 | 0.981 | ||

| 4 | 0.002 | 0.026 | 0.205 | 0.351 | 0.507 | 0.775 | 0.927 | ||

| 5 | 0.004 | 0.073 | 0.158 | 0.276 | 0.562 | 0.806 | |||

| 6 | 0.001 | 0.019 | 0.054 | 0.118 | 0.335 | 0.613 | |||

| 7 | 0.004 | 0.014 | 0.039 | 0.158 | 0.387 | ||||

| 8 | 0.001 | 0.003 | 0.009 | 0.057 | 0.194 | ||||

| 9 | 0.002 | 0.015 | 0.073 | ||||||

| 10 | 0.003 | 0.019 | |||||||

| 11 | 0.003 | ||||||||

| 15 | 1 | 0.140 | 0.537 | 0.794 | 0.965 | 0.987 | 0.995 | 1.000 | 1.000 |

| 2 | 0.010 | 0.171 | 0.451 | 0.833 | 0.920 | 0.965 | 0.995 | 1.000 | |

| 3 | 0.036 | 0.184 | 0.602 | 0.764 | 0.873 | 0.973 | 0.996 | ||

| 4 | 0.005 | 0.056 | 0.352 | 0.539 | 0.703 | 0.909 | 0.982 | ||

| 5 | 0.001 | 0.013 | 0.164 | 0.314 | 0.485 | 0.783 | 0.941 | ||

| 6 | 0.002 | 0.061 | 0.148 | 0.278 | 0.597 | 0.849 | |||

| 7 | 0.018 | 0.057 | 0.131 | 0.390 | 0.696 | ||||

| 8 | 0.004 | 0.017 | 0.050 | 0.213 | 0.500 | ||||

| 9 | 0.001 | 0.004 | 0.015 | 0.095 | 0.304 | ||||

| 10 | 0.001 | 0.004 | 0.034 | 0.151 | |||||

| 11 | 0.001 | 0.009 | 0.059 | ||||||

| 12 | 0.002 | 0.018 | |||||||

| 13 | 0.004 | ||||||||

| 20 | 1 | 0.182 | 0.642 | 0.878 | 0.988 | 0.997 | 0.999 | 1.000 | 1.000 |

| 2 | 0.017 | 0.264 | 0.608 | 0.931 | 0.976 | 0.992 | 0.999 | 1.000 | |

| 3 | 0.001 | 0.075 | 0.323 | 0.794 | 0.909 | 0.965 | 0.996 | 1.000 | |

| 4 | 0.016 | 0.133 | 0.589 | 0.775 | 0.893 | 0.984 | 0.999 | ||

| 5 | 0.003 | 0.043 | 0.370 | 0.585 | 0.762 | 0.949 | 0.994 | ||

| 6 | 0.011 | 0.196 | 0.383 | 0.584 | 0.874 | 0.979 | |||

| 7 | 0.002 | 0.087 | 0.214 | 0.392 | 0.750 | 0.942 | |||

| 8 | 0.032 | 0.102 | 0.228 | 0.584 | 0.868 | ||||

| 9 | 0.010 | 0.041 | 0.113 | 0.404 | 0.748 | ||||

| 10 | 0.003 | 0.014 | 0.048 | 0.245 | 0.588 | ||||

| 11 | 0.001 | 0.004 | 0.017 | 0.128 | 0.412 | ||||

| 12 | 0.001 | 0.005 | 0.057 | 0.252 | |||||

| 13 | 0.001 | 0.021 | 0.132 | ||||||

| 14 | 0.006 | 0.058 | |||||||

| 15 | 0.002 | 0.021 | |||||||

| 16 | 0.006 | ||||||||

| 17 | 0.001 |

This table gives [latex]P(X \ge x)[/latex] for [latex]X\sim\mbox{Binomial}(n,p)[/latex]. These probabilities will give quicker and more accurate results than can be obtained by summing the individual probabilities from the previous table. For [latex]p \ge 0.50[/latex] reverse your question by counting failures instead of successes.